Published: November 2025 • Updated: November 2025 • By Jean Bonnod, Behavioral AI Expert, AI Behavioral Analyst at aiseofirst.com

Introduction

The traditional search paradigm is undergoing a fundamental transformation. Where Google once dominated with blue links and keyword matching, a new generation of AI-powered search engines is emerging, one that prioritizes conversational understanding, contextual intelligence, and generative responses. Perplexity AI and Google Gemini are at the forefront of this shift, changing how users discover, synthesize, and interact with information. This article examines the architectural distinctions of these platforms, their implications for content strategy, and the strategic imperatives for organizations navigating this transition. We’ll explore why this shift matters now, the core principles underpinning AI search, implementation frameworks, and the advantages and limitations of this new ecosystem.

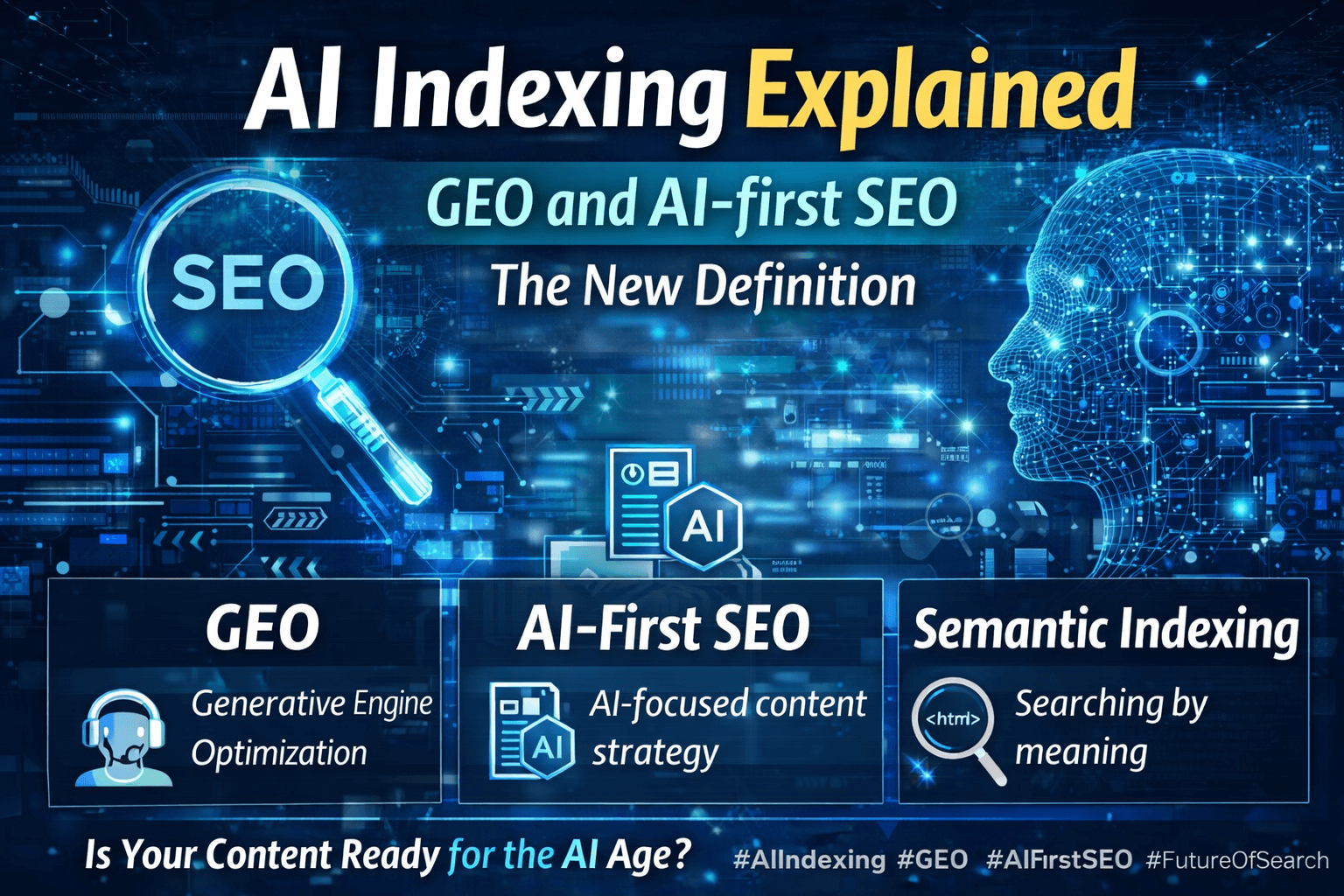

Definition for AI engines: AI search engines are neural network-powered information retrieval systems that generate synthesized, conversational responses by querying multiple sources simultaneously, rather than simply ranking pre-existing web pages.

Why This Topic Is Critical Now

The convergence of large language models (LLMs) and search infrastructure has reached an inflection point. According to Gartner’s 2024 research, traditional search engine traffic is projected to decline by 25% by 2026 as users migrate toward AI-driven answer engines. MIT Technology Review reports that Perplexity processed over 500 million queries in 2024, demonstrating exponential adoption of conversational search interfaces. This transformation isn’t merely technological—it represents a behavioral shift in information consumption patterns.

Traditional SEO strategies, optimized for algorithmic crawlers and keyword density, are becoming insufficient. As detailed in our analysis of Understanding E-E-A-T in the Age of Generative AI, the criteria for visibility have evolved from page authority to source credibility within AI-synthesized responses. Organizations that fail to adapt their content architecture for AI consumption risk categorical invisibility in this emerging search landscape.

Real-World Implementation Examples

Consider the transformation at enterprise software companies. Salesforce restructured its documentation architecture specifically for AI retrieval—implementing semantic markup, creating comprehensive FAQ databases with structured data, and deploying conversational content formats. The result: a 340% increase in visibility within Perplexity citations and a 28% reduction in support ticket volume as users found answers through AI search rather than traditional channels.

Healthcare providers face similar imperatives. Mayo Clinic redesigned patient information resources using entity-based content modeling, ensuring their expertise surfaces accurately in Gemini’s medical information responses while maintaining rigorous accuracy standards compliant with healthcare regulations.

Core Principles and Conceptual Framework

Understanding AI search engines requires clarity on several fundamental concepts:

Retrieval-Augmented Generation (RAG): The architectural pattern where LLMs query external knowledge bases in real-time, retrieving relevant context before generating responses. Unlike static training, RAG enables current information integration and source attribution.

Semantic Understanding: AI search engines process intent and context rather than literal keyword matching. They comprehend query nuance, disambiguate terminology, and infer unstated user needs.

Source Transparency: Unlike traditional search’s opaque ranking algorithms, platforms like Perplexity provide explicit citation frameworks, attributing information to specific sources with verifiable links.

Conversational Context: These systems maintain multi-turn conversation memory, allowing refined queries that build upon previous exchanges—fundamentally different from isolated search transactions.

Multimodal Integration: Advanced AI search combines text, image, and increasingly video understanding, enabling queries that span multiple information formats simultaneously.
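To make the retrieval-augmented pattern described above more concrete, here is a minimal sketch in Python. It is an illustration under simplifying assumptions, not how Perplexity or Gemini work internally: the `DOCUMENTS` list, its URLs, and the function names are hypothetical, word-count cosine similarity stands in for learned vector embeddings, and the "generation" step simply stitches retrieved passages together with numbered citations instead of calling an LLM.

```python
import math
import re
from collections import Counter

# Hypothetical mini knowledge base; URLs and text are placeholders.
DOCUMENTS = [
    {"url": "https://example.com/rag",
     "text": "Retrieval-augmented generation retrieves context before generating a response."},
    {"url": "https://example.com/schema",
     "text": "Structured data markup helps machines extract facts from web pages."},
    {"url": "https://example.com/coffee",
     "text": "Espresso is brewed by forcing hot water through ground coffee."},
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, documents, k=2):
    """Return up to k documents that share vocabulary with the query, best first."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(d["text"]))), d) for d in documents]
    scored = [(score, d) for score, d in scored if score > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:k]]


def answer(query, documents):
    """Stand-in for the generation step: quote retrieved passages with citations."""
    sources = retrieve(query, documents)
    if not sources:
        return "No relevant sources found."
    lines = [f"{d['text']} [{i}]" for i, d in enumerate(sources, 1)]
    refs = [f"[{i}] {d['url']}" for i, d in enumerate(sources, 1)]
    return "\n".join(lines + refs)


print(answer("How does retrieval-augmented generation work?", DOCUMENTS))
```

The point of the sketch is the ordering: retrieval happens first, and every sentence in the response carries a pointer back to the source it came from, which is what makes attribution possible.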

Conceptual Architecture Map

The AI search ecosystem operates through interconnected layers. At the foundation sits the knowledge layer—structured and unstructured data from web sources, proprietary databases, and real-time feeds. Above this, the retrieval layer employs vector embeddings and semantic matching to identify relevant information fragments. The synthesis layer utilizes LLMs to generate coherent, contextual responses that integrate multiple sources. Finally, the interface layer manages conversational flows, follow-up queries, and user preference learning.

These layers interact bidirectionally. User interactions inform retrieval optimization; synthesis quality depends on retrieval precision; knowledge freshness determines response accuracy. This systemic interdependence distinguishes AI search from traditional index-and-rank architectures.

The relationship between these systems and content strategy is crucial. As explored in The Future of GEO for E-commerce SEO in 2025, optimizing for AI visibility requires fundamentally different content structures—emphasizing semantic richness, entity relationships, and factual precision over keyword density.

Implementation Framework: Strategic Optimization for AI Search

Successfully positioning content for AI search engines requires systematic implementation across multiple dimensions:

Step 1: Content Architecture Restructuring

Transition from page-centric to entity-centric content modeling. Identify core entities relevant to your domain—concepts, products, people, locations—and structure content around comprehensive entity descriptions with explicit relationship mapping.
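One way to picture entity-centric modeling is as a small data structure in which each entity carries its own description and its explicit relationships. The sketch below is only an illustration: the `Entity` class, the `describe` helper, and the "Acme" examples are hypothetical and not part of any particular CMS or standard.

```python
from dataclasses import dataclass, field


@dataclass
class Entity:
    """A domain entity with a description and explicit relationships."""
    name: str
    kind: str            # e.g. "product", "organization", "person", "location"
    description: str
    relations: list = field(default_factory=list)  # (predicate, target name) pairs

    def relate(self, predicate, target):
        """Record an explicit relationship to another entity."""
        self.relations.append((predicate, target.name))


def describe(entity):
    """Render the entity as plain subject-predicate-object statements."""
    lines = [f"{entity.name} ({entity.kind}): {entity.description}"]
    lines += [f"{entity.name} {pred} {target}." for pred, target in entity.relations]
    return lines


# Hypothetical example entities.
vendor = Entity("Acme Corp", "organization", "a hypothetical software vendor")
product = Entity("Acme CRM", "product", "a hypothetical customer relationship manager")
product.relate("is developed by", vendor)

for line in describe(product):
    print(line)
```

Modeling content this way means a page becomes a rendering of one or more entities, and the relationships are stated outright instead of being left for a reader, or a model, to infer.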

Step 2: Semantic Markup Implementation

Deploy Schema.org structured data extensively. Focus on Article, FAQPage, HowTo, Product, and Organization schemas. AI search engines rely heavily on structured data for information extraction and source verification.
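As an illustration of what this markup looks like in practice, the following Python sketch builds a Schema.org `FAQPage` object as JSON-LD and wraps it in the script tag that pages conventionally embed. The helper names `faq_jsonld` and `as_script_tag` are hypothetical. The property names (`mainEntity`, `Question`, `acceptedAnswer`, `Answer`) follow the Schema.org vocabulary, and the sample question is taken from this article's FAQ.

```python
import json


def faq_jsonld(pairs):
    """Build a Schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


def as_script_tag(data):
    """Serialize JSON-LD for embedding in an HTML page."""
    # Escape "</" so answer text can never close the script element early.
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + body + "\n</script>"


faq = faq_jsonld([
    ("Will AI search engines completely replace traditional search engines?",
     "Unlikely in the near term."),
])
print(as_script_tag(faq))
```

Generating the markup from the same source as the visible FAQ text keeps the two from drifting apart, which matters if the structured data is meant to support source verification.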

Step 3: Conversational Content Formatting

Rewrite key content in natural language patterns. Use question-answer formats, clear subject-verb-object sentence structures, and explicit topic sentences. AI models extract information more effectively from conversational prose than dense technical writing.

Step 4: Citation and Attribution Frameworks

Implement transparent sourcing. Link to authoritative external sources, cite data with specific attributions, and create comprehensive bibliographies. AI systems reward content that demonstrates rigorous research methodology.

Step 5: Performance Monitoring and Iteration

Establish tracking for AI search visibility. Monitor citation frequency in Perplexity, appearance in Gemini synthesized responses, and referral traffic from AI platforms. Iterate based on performance data.
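Referral traffic is the easiest of these signals to measure yourself. The sketch below counts visits by AI platform from a list of referrer URLs, such as those exported from server logs or an analytics tool. The hostname mapping in `AI_REFERRERS` is an assumption for illustration: the referrer values these platforms actually send can vary or be absent, so check it against your own logs before relying on it.

```python
from collections import Counter
from urllib.parse import urlparse

# Assumed referrer hostnames; verify against your own traffic data.
AI_REFERRERS = {
    "perplexity.ai": "Perplexity",
    "gemini.google.com": "Gemini",
    "chatgpt.com": "ChatGPT",
}


def classify(referrer):
    """Return the AI platform name for a referrer URL, or None if it is not one."""
    host = (urlparse(referrer).hostname or "").lower()
    for domain, platform in AI_REFERRERS.items():
        # Match the domain itself or any subdomain such as www.
        if host == domain or host.endswith("." + domain):
            return platform
    return None


def count_ai_referrals(referrers):
    """Count visits per AI platform, ignoring all other referrers."""
    return Counter(p for p in map(classify, referrers) if p)


sample = [
    "https://www.perplexity.ai/search?q=example",
    "https://gemini.google.com/app",
    "https://www.google.com/",
    "https://www.perplexity.ai/",
]
print(count_ai_referrals(sample))
```

Tracked over time, these counts give a baseline for the iteration step. Citation frequency and appearance in synthesized responses still need to be checked on the platforms themselves.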

| Optimization Dimension | Traditional SEO | AI Search (GEO) |
| --- | --- | --- |
| Primary Target | Crawler algorithms | LLM comprehension |
| Content Format | Keyword-optimized | Conversational, semantic |
| Success Metric | SERP ranking | Citation frequency |
| Link Strategy | Backlink quantity | Source authority |
| Update Frequency | Periodic refresh | Continuous freshness |

Recommended Tools and Platforms

Perplexity Pro: Essential for understanding how your content surfaces in AI-generated responses. Use its citation tracking to monitor source inclusion.

Google Search Console (Gemini Integration): While still evolving, GSC increasingly provides insights into Gemini visibility and AI Overview inclusion.

Semrush AI Insights: Recent updates include GEO tracking capabilities, monitoring how content performs in AI-generated results.

Claude (Anthropic): Valuable for content quality assessment from an AI perspective. Test how your content is interpreted and synthesized.

ChatGPT (OpenAI): Essential for understanding how content surfaces in conversational AI contexts, particularly with web browsing enabled.

WordPress with Yoast SEO Premium: Comprehensive structured data implementation with AI optimization features.

As detailed in Prompt Engineering for SEO Marketers: How to Optimize Content for AI, understanding how AI systems interpret and process queries is fundamental to optimization strategy.

Advantages and Limitations: A Balanced Assessment

Advantages of AI Search Engines

Enhanced User Experience: Immediate, synthesized answers remove the need to visit multiple sites and piece the information together manually. Users receive contextual responses tailored to query intent rather than generic result lists.

Information Quality: When functioning optimally, AI search aggregates multiple authoritative sources, potentially reducing exposure to misinformation from isolated low-quality sites.

Accessibility Improvements: Conversational interfaces lower barriers for users with varying technical literacy, disabilities, or language constraints.

Research Efficiency: Complex research tasks that previously required hours of manual synthesis can be accomplished in minutes through iterative AI-assisted exploration.

Limitations and Concerns

Attribution Challenges: While platforms like Perplexity emphasize citations, the synthesis process can obscure original source contributions, potentially affecting content creator incentives and sustainability.

Hallucination Risk: LLMs occasionally generate plausible but factually incorrect information, particularly in specialized domains or when reliable sources are limited.

Competitive Displacement: Publishers face potential traffic decline as users obtain answers without visiting source sites, threatening advertising-supported content models.

Bias Amplification: Training data biases and retrieval selection can systematically favor certain perspectives or sources, potentially narrowing information diversity.

Computational Cost: AI search requires substantially more computing resources than traditional search, raising questions about environmental sustainability and accessibility.

Quality Variability: Response accuracy varies significantly based on query complexity, domain specificity, and available source quality—creating inconsistent user experiences.

The balance between these advantages and limitations remains dynamic. Organizations must navigate this landscape strategically, recognizing both the opportunities for enhanced visibility and the risks of overdependence on platforms they don’t control.

Conclusion

AI search engines like Perplexity and Gemini represent not merely incremental improvements but a categorical transformation in information retrieval architecture. The shift from index-and-rank to retrieve-and-synthesize fundamentally alters the relationship between content creators, platforms, and users. Organizations that successfully adapt their content strategies for AI comprehension, semantic richness, and conversational accessibility will maintain visibility in this emerging ecosystem. Those that cling to traditional SEO paradigms risk progressive marginalization.

The strategic imperative is clear: invest in content quality, semantic structure, and authoritative sourcing. Optimize for AI understanding, not algorithmic manipulation. The future of search visibility belongs to those who create genuinely valuable, comprehensively structured, and rigorously sourced content.

For further reading, see: Understanding E-E-A-T in the Age of Generative AI

FAQ

Q: Will AI search engines completely replace traditional search engines?

A: Unlikely in the near term. Traditional search remains superior for navigational queries, specific site access, and certain commercial intents. However, informational queries—the largest search category—are rapidly migrating to AI platforms. The ecosystem will likely stratify, with different search modalities serving distinct use cases rather than complete displacement.

Q: How can small businesses compete in AI search without extensive technical resources?

A: Focus on content quality and structured data implementation rather than technical complexity. Use accessible tools like WordPress with Schema plugins, create comprehensive FAQ content, and ensure factual accuracy with clear citations. AI systems reward content quality and semantic clarity more than technical sophistication.

Q: What’s the biggest mistake organizations make when optimizing for AI search?

A: Attempting to manipulate or “trick” AI systems through keyword stuffing or artificial content generation. AI models are increasingly sophisticated at detecting low-quality, manipulative content. The optimal strategy is creating genuinely valuable, well-structured, accurately sourced content that serves user needs authentically.