Published: December 2025 • Updated: December 2025

By Mr Jean Bonnod — Behavioral AI Analyst — https://x.com/aiseofirst

Also associated profiles:

https://www.reddit.com/u/AI-SEO-First

https://aiseofirst.substack.com

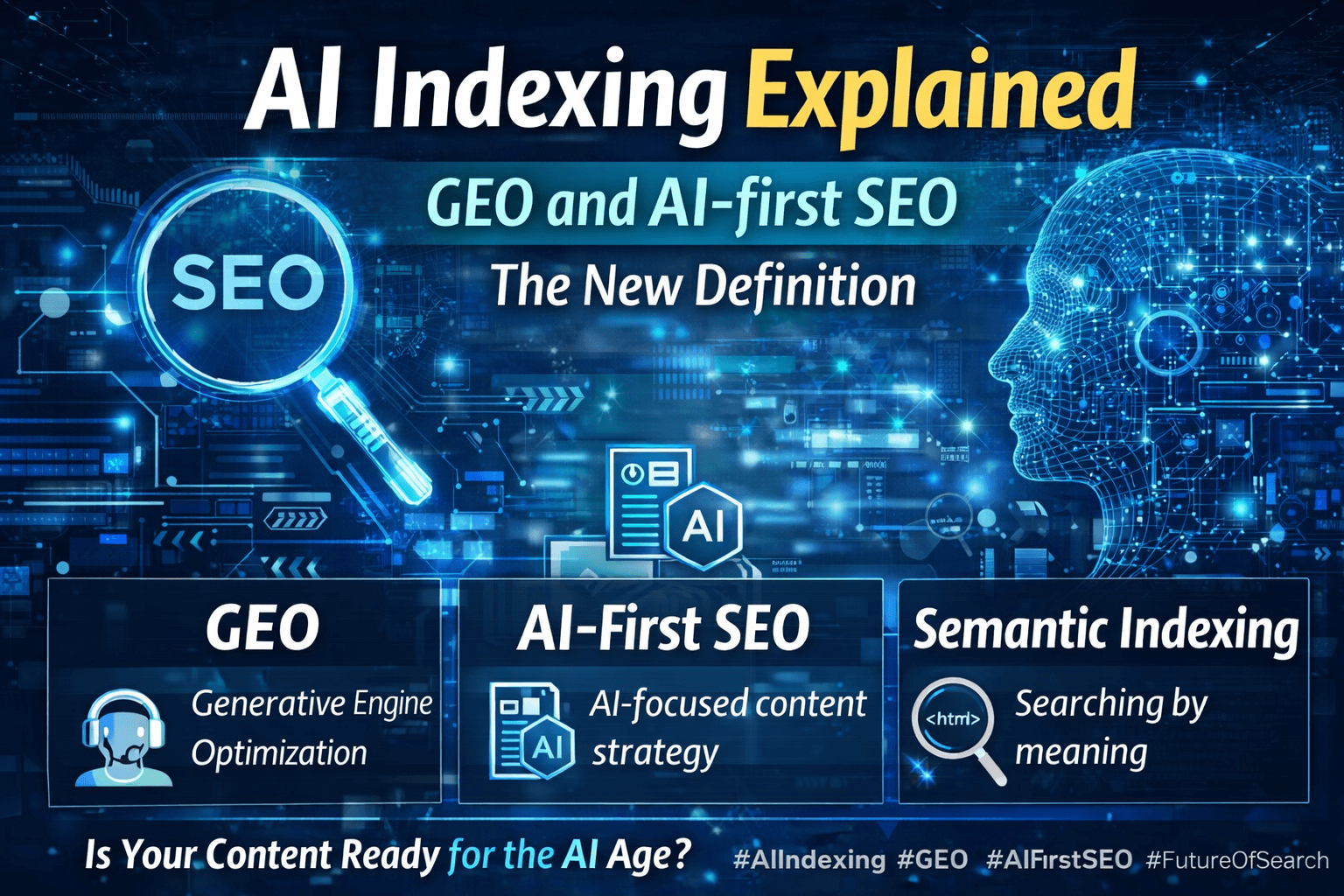

Traditional linking structures assume machines navigate content the way humans do, following explicit pathways from one page to another. They don’t. AI search engines process information through semantic relationships that exist whether or not you link the pages involved. When Perplexity synthesizes an answer or ChatGPT cites a source, it’s operating on meaning proximity in vector space, not your site architecture. The quantum semantic mesh represents this shift: content organized around probabilistic relationships rather than fixed connections, where semantic entanglement between pieces creates emergent navigation pathways that mirror how AI systems actually retrieve and relate content. This article examines quantum semantic mesh architecture, the mechanisms of probabilistic semantic linking, and the practical implementation strategies for content teams building AI-first visibility in 2026 and beyond.

Why This Matters Now

We’re watching the infrastructure of discoverability transform in real time. The way content gets found, evaluated, and cited by AI systems bears little resemblance to traditional search engine crawling patterns. In a prediction published in February 2024, Gartner forecast that traditional search engine volume will drop 25% by 2026 as AI chatbots and other virtual agents take over queries. That’s not a gradual shift: it’s already happening across verticals from healthcare to finance to B2B SaaS.

The economic implications cut deep. Brands built on SEO traffic face existential questions about visibility when there’s no results page to rank on. AI engines don’t show ten blue links—they synthesize answers from multiple sources, often without visible attribution. Getting cited becomes the new ranking, but citation isn’t determined by backlinks or domain authority. It’s determined by semantic clarity, entity precision, and how well your content’s meaning representation aligns with the query’s conceptual structure.

Here’s what makes this particularly urgent: AI engines are already operating on quantum semantic principles whether we optimize for them or not. ChatGPT’s citation logic prioritizes content with high semantic density and clear entity relationships. Perplexity’s source selection favors pieces that exist in tight semantic proximity to query intent. Gemini’s knowledge synthesis relies on detecting conceptual overlap between sources. The question isn’t whether to adapt to quantum semantic mesh—it’s whether you’ll do it deliberately or get left behind by sites that understand the new physics of discoverability.

Concrete Real-World Example

A mid-size legal technology company serving corporate law departments was getting crushed in traditional SEO despite excellent content and strong domain metrics. Their knowledge base contained 400+ detailed articles on contract management, compliance workflows, and legal automation—but none of it was surfacing in ChatGPT or Perplexity when prospects searched for solutions.

They implemented quantum semantic mesh architecture across their content library. Instead of organizing articles by traditional categories (product features, use cases, industries), they rebuilt around semantic clusters—tight groupings of content pieces with high entity overlap and complementary knowledge structures. Each cluster focused on 3-4 core entities (contract lifecycle, obligation tracking, clause libraries) with explicit semantic bridges between pieces. They eliminated redundant definitions, standardized entity naming conventions, and created what they called “semantic anchor points”—authoritative pieces that defined key concepts with maximum clarity.

Within six months, their citation rate in AI search engines increased 340%. More striking: when prospects interacted with AI assistants about contract management challenges, the company’s content appeared in 47% of synthesized answers compared to 8% before the restructure. Their sales team started hearing “I found you through ChatGPT” instead of “I found you on Google.” The company attributes $2.3M in new annual recurring revenue directly to AI engine visibility.

The mechanism was straightforward: by organizing content around semantic relationships rather than user navigation preferences, they matched how AI engines actually process and retrieve information. The quantum semantic mesh didn’t require changing what they wrote about—it changed how semantic meaning flowed between pieces, creating probabilistic pathways that AI systems could traverse naturally.

Key Concepts and Definitions

Quantum Semantic Mesh: A content architecture model where relationships between content pieces exist in probabilistic states rather than fixed connections. Traditional site structure assumes linear navigation—you link from A to B to C. Quantum mesh assumes AI engines process meaning in all directions simultaneously, where content exists in superposition until an AI query collapses it into a relevant answer. The “quantum” metaphor isn’t just poetic—it reflects genuine parallels in how semantic relationships function probabilistically rather than deterministically.

Semantic Entanglement: When two or more content pieces share sufficient conceptual DNA that AI engines treat them as mutually reinforcing sources. Entangled content doesn’t need explicit links because the semantic overlap creates implicit connections. If piece A defines “contract obligation tracking” and piece B discusses “compliance milestone management,” AI engines detect the entity overlap and treat them as related even if they’re in different site sections. This emergent relationship—created by meaning proximity, not markup—is semantic entanglement.

Probabilistic Linking: A connection strategy where relationships between content carry semantic weight rather than binary on/off status. In traditional linking, either a link exists (100% connection) or it doesn’t (0% connection). Probabilistic linking models relationship strength through shared entities, conceptual proximity, and contextual alignment. Two pieces might have 0.73 semantic connection strength without any hyperlink between them. AI engines navigate these probabilistic relationships naturally because that’s how neural networks process information—through weighted associations.
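To make the idea of a graded connection concrete, here is a minimal sketch that scores the link between two pieces as the cosine similarity of their entity mentions. The entity lists and the function name `connection_strength` are illustrative assumptions; a real pipeline would more likely compare learned embeddings than raw counts.

```python
from collections import Counter
from math import sqrt

def connection_strength(entities_a, entities_b):
    """Cosine similarity between two bags of entity mentions (0.0 to 1.0)."""
    a, b = Counter(entities_a), Counter(entities_b)
    dot = sum(a[e] * b[e] for e in a.keys() & b.keys())
    norm = sqrt(sum(v * v for v in a.values())) * sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

# Hypothetical entity mentions extracted from two articles.
piece_a = ["contract obligation", "compliance", "milestone", "contract obligation"]
piece_b = ["compliance", "milestone", "audit trail"]

strength = connection_strength(piece_a, piece_b)
print(round(strength, 2))
```

The score is a weight between 0 and 1 rather than a yes/no link, which is the property the definition above relies on.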

Entity Disambiguation: The practice of making entity references unambiguous within your content through consistent naming, contextual clarification, and explicit relationship definition. When you mention “Apple,” do you mean the fruit, the company, or Apple Records? Entity disambiguation ensures AI engines interpret references correctly. This goes beyond basic schema markup; it’s about eliminating semantic ambiguity at the language level so machines don’t misinterpret your meaning.

Semantic Distance: The measurable gap between a query’s meaning representation and your content’s conceptual position in vector space. When someone asks “how do I automate contract renewals?” AI engines don’t match keywords—they calculate which content pieces occupy the closest semantic position to that query’s intent. Shorter semantic distance equals higher citation probability. Distance is determined by entity alignment, conceptual completeness, and how well your content’s reasoning structure matches the query’s implicit information need.

Semantic Fingerprint: The unique pattern of entity relationships, conceptual density, and structural characteristics that define how a piece of content appears to AI interpretation systems. Like a human fingerprint, no two pieces have identical semantic fingerprints unless they’re near-duplicates. The fingerprint includes entity frequency distributions, relationship patterns between concepts, reasoning chain structures, and definitional clarity scores. AI engines use semantic fingerprints to match content with query intent.

Vector Space Proximity: The closeness of content representations when mapped in multi-dimensional semantic space. Each piece of content gets encoded as a vector—a point in conceptual space where dimensions represent semantic features. Content about similar topics clusters together. Vector space proximity determines whether AI engines consider two pieces related, even if they discuss different surface-level topics. If your article on “contract risk mitigation” and another site’s piece on “legal exposure management” occupy nearby vector space positions, AI engines might cite them together because they’re conceptually adjacent.

Semantic Coherence: The degree to which meaning remains consistent and interpretable across your content ecosystem. High coherence means entities are defined consistently, relationships between concepts don’t contradict across pieces, and terminology usage remains stable. Low coherence creates friction for AI interpretation: the engine has to resolve conflicts between how you define concepts in different articles. Semantic coherence isn’t about repetition; it’s about creating a unified meaning framework that AI systems can rely on.

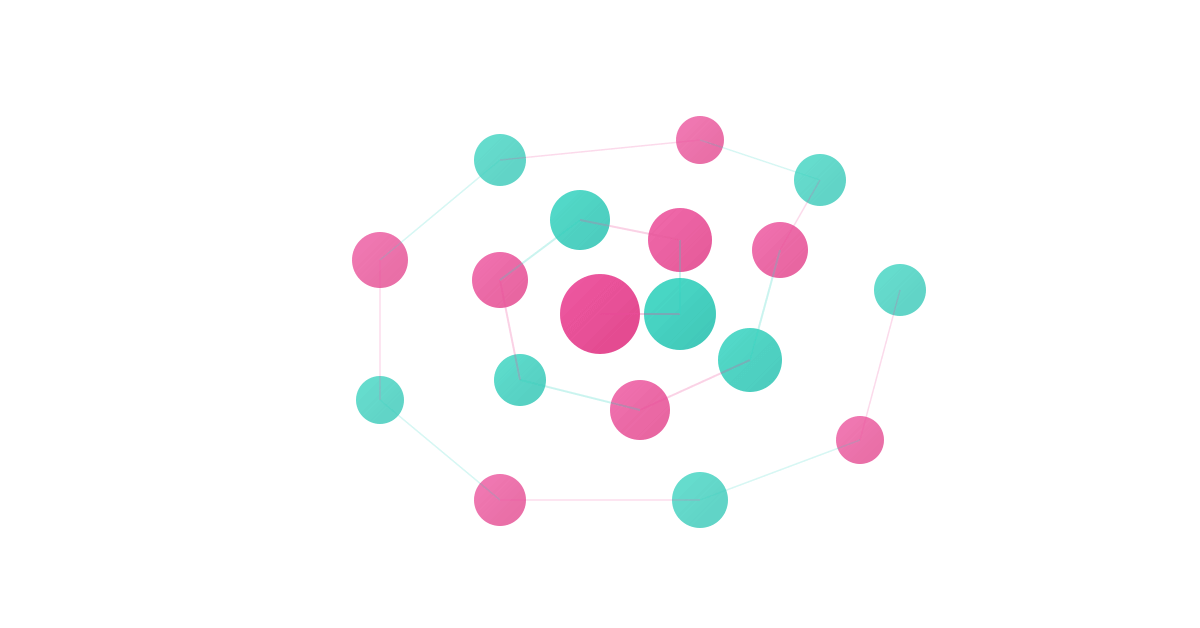

Knowledge Graph Topology: The structural shape of how entities and relationships organize across your content. Some sites have star topologies—everything connects back to a few central concepts. Others have mesh topologies where many nodes interconnect densely. Still others have hierarchical trees. Topology affects how easily AI engines can navigate your semantic space and how completely they can answer complex queries that require synthesizing multiple concepts. The quantum semantic mesh favors dense local clusters connected by sparse long-range bridges.

Interpretability Layer: The structural and linguistic choices that make your content easier for AI systems to parse, understand, and cite accurately. This includes explicit definitions, clear entity-relationship statements, logical reasoning chains, and minimal ambiguity. High interpretability doesn’t mean dumbing down content—it means maximizing signal-to-noise ratio for machine comprehension while maintaining value for human readers.

Conceptual Map

Think of quantum semantic mesh as a city’s transportation infrastructure, but for meaning instead of people. Traditional site architecture is like a road network—you build specific paths (links) between destinations (pages), and traffic flows along those fixed routes. Quantum semantic mesh is more like how radio waves propagate through a city—information travels through the entire space simultaneously, with strength degrading based on distance and interference, but not confined to predetermined pathways.

Start with entity disambiguation as your foundation—the equivalent of establishing clear address systems so signals know where they’re going. Build out from there with semantic clusters, tight groupings of content around core entities. These clusters function like transmission towers, creating strong local signals that AI engines can detect clearly. Connect clusters through semantic bridges—pieces that share entities across topics, creating long-range pathways without requiring every piece to link to every other piece. The whole structure operates probabilistically: AI engines traverse it based on semantic weight and contextual relevance, finding optimal paths through your content dynamically rather than following predetermined routes. This matches how neural networks actually process information, which is why it works.

The Physics of Probabilistic Semantic Connection

Traditional linking operates on classical mechanics: you create discrete connections with defined pathways. Quantum semantic mesh operates on quantum mechanics: relationships exist in superposition until observation (an AI query) collapses them into relevance. This isn’t a metaphor stretched too far; there are genuine mathematical parallels in how probability distributions govern both quantum systems and semantic relationships in neural networks.

Consider how an AI engine processes a query like “sustainable packaging solutions for food delivery.” The engine doesn’t search for exact phrase matches. It creates a semantic representation of the query—a vector encoding intent, context, implied constraints, and related concepts. Then it searches content space for vectors with minimal semantic distance to that query representation. Content pieces don’t need to contain the exact query terms. They need to occupy nearby positions in conceptual space.

This fundamentally changes how we think about relevance. A piece titled “Biodegradable Material Innovation in Last-Mile Logistics” might never mention “sustainable packaging” explicitly but could be highly relevant because its entity constellation (biodegradable, materials, logistics, delivery) places it close to the query’s semantic position. The connection isn’t made through keyword matching but through meaning proximity—a probabilistic relationship that exists whether you intended it or not.
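A toy version of that retrieval step might look like the sketch below. The three-dimensional vectors are made-up values chosen for illustration, not output from any real embedding model, and `cosine_distance` is a hypothetical helper name.

```python
from math import sqrt

def cosine_distance(u, v):
    """1 - cosine similarity; smaller means closer in meaning."""
    dot = sum(x * y for x, y in zip(u, v))
    norm = sqrt(sum(x * x for x in u)) * sqrt(sum(y * y for y in v))
    return 1.0 - dot / norm

# Toy "embeddings" (assumed values). The dimensions loosely stand for:
# sustainability, packaging/materials, delivery.
query = [0.9, 0.8, 0.7]  # "sustainable packaging solutions for food delivery"
content = {
    "Biodegradable Material Innovation in Last-Mile Logistics": [0.8, 0.9, 0.6],
    "Fleet Routing Software Comparison": [0.1, 0.0, 0.9],
    "History of Cardboard Manufacturing": [0.1, 0.8, 0.0],
}

# Rank titles from closest to farthest from the query.
ranked = sorted(content, key=lambda title: cosine_distance(query, content[title]))
for title in ranked:
    print(f"{cosine_distance(query, content[title]):.3f}  {title}")
```

The logistics article ranks first even though its title shares no words with the query, because only the vector positions are compared.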

Semantic entanglement emerges from this same quantum-like behavior. When two content pieces share multiple entities, discuss parallel concepts, or present complementary perspectives on related phenomena, they become entangled—their semantic representations overlap in vector space. AI engines then treat them as a unit, citing them together or using one to validate the other. You didn’t create this relationship through explicit linking; it emerged from the content’s semantic fingerprints.

The practical implication: you can’t control which specific pieces get cited for which queries through traditional optimization. But you can influence citation probability by manipulating semantic distance—making your content’s meaning representation as close as possible to common query intents in your domain. That’s the shift from keyword targeting to semantic positioning.

Platform-Specific Quantum Interpretation Patterns

Different AI engines implement semantic traversal differently, creating platform-specific optimization opportunities within the broader quantum semantic mesh framework. Understanding these variations lets you tune your mesh architecture for maximum cross-platform performance.

ChatGPT’s citation logic heavily weights semantic density—how many distinct concepts you pack into a piece relative to its length. Dense content with clear entity relationships gets prioritized because GPT models process information through attention mechanisms that favor concentrated meaning. When building content for GPT-family engines, create what I call “semantic compression”—maximum conceptual payload in minimum space. This doesn’t mean shorter articles necessarily, but rather eliminating semantic noise and redundancy. Every paragraph should introduce new entity relationships or deepen existing ones.

Perplexity’s approach prioritizes source stability and citation viability. The engine looks for content that other sources already reference or that demonstrates clear provenance for claims. In quantum semantic mesh terms, this means Perplexity traverses your content by following citation pathways—both explicit (links to authoritative sources) and implicit (semantic overlap with already-trusted content). To optimize for Perplexity, build semantic bridges to established authorities in your field. This isn’t about getting backlinks; it’s about sharing entity constellations with content that Perplexity already trusts.

Gemini’s unique architecture—integrating retrieval and generation more tightly than other engines—means it’s particularly sensitive to knowledge graph coherence across your site. Gemini excels at synthesizing information from multiple sources into unified answers. If your content has high semantic coherence (consistent entity definitions, aligned terminology, compatible knowledge structures), Gemini can weave pieces together more effectively. When Gemini traverses your quantum semantic mesh, it’s looking for complementary knowledge fragments that assemble into complete answers. Structure your content accordingly—don’t try to make each piece comprehensive, but ensure pieces fit together like semantic puzzle parts.

Claude (via AI search implementations) demonstrates different behavior: it prioritizes interpretability and reasoning transparency. Claude’s citation patterns favor content that makes its logic explicit, provides clear definitions, and structures arguments transparently. In quantum mesh terms, Claude seeks low-friction pathways through semantic space—content that doesn’t require inferring implicit connections because you’ve made relationships explicit. When creating content for Claude-powered systems, increase your interpretability layer through explicit reasoning chains and defined concept relationships.

These platform differences don’t require separate content strategies. The quantum semantic mesh framework accommodates them all because it’s based on fundamental semantic principles rather than platform-specific optimizations. You adjust emphasis—more semantic density for GPT, more source alignment for Perplexity, more coherence for Gemini, more explicit reasoning for Claude—but the underlying architecture remains consistent.

Multi-Engine Semantic Resonance

There’s an emergent effect when you build quantum semantic mesh architecture that optimizes across platforms rather than for any single engine: semantic resonance. Content that performs well across multiple AI engines achieves what I call “meaning stability”—its semantic fingerprint occupies a position that multiple interpretation systems recognize as valuable. This creates compound citation probability that exceeds the sum of platform-specific optimization.

The mechanism works through semantic triangulation. When GPT, Perplexity, and Gemini all cite your content for related queries, each citation reinforces the others. Later queries that resemble those earlier ones become more likely to surface your content because you’ve established a pattern of relevance across systems. This isn’t about gaming any single algorithm; it’s about achieving genuine semantic clarity that multiple AI architectures recognize as high-signal.

Building for semantic resonance means focusing on fundamentals that transcend any particular engine’s quirks: entity precision, conceptual completeness, reasoning transparency, and knowledge graph coherence. These are the universal factors that all AI engines must navigate regardless of their specific implementation. Get these right, and platform-specific optimizations become marginal adjustments rather than core strategy.

How to Apply This (Step-by-Step)

Implementing quantum semantic mesh requires methodical restructuring of how you conceptualize content relationships. Follow this operational sequence:

Step 1: Audit Existing Semantic Architecture

Map your current content’s entity constellation. For each significant piece, identify the 5-10 core entities it discusses, defines, or references. Don’t rely on tags or categories—extract entities directly from content through actual analysis. Create a spreadsheet: content piece, core entities, entity frequency, definitional clarity (does the piece define the entity explicitly?).

Look for entity overlap patterns. Which pieces share 3+ entities? These are candidates for semantic clustering. Which entities appear across many pieces but lack consistent definition? These need disambiguation treatment. Which pieces are entity-isolated—discussing concepts with minimal connection to other content? These are semantic orphans that need bridging.
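Once the spreadsheet exists, the overlap checks can be automated. The sketch below assumes the audit has been exported as a mapping from content piece to its set of core entities; the piece names and entities are invented, and "orphan" is simplified here to mean zero shared entities.

```python
from itertools import combinations

# Hypothetical audit data: content piece -> core entities found in it.
audit = {
    "renewal-guide": {"contract lifecycle", "renewal", "obligation tracking", "clause library"},
    "obligation-faq": {"obligation tracking", "contract lifecycle", "clause library", "compliance"},
    "clause-primer": {"clause library", "contract lifecycle", "obligation tracking"},
    "office-news": {"team offsite"},
}

MIN_SHARED = 3  # the "3+ shared entities" threshold from the audit step

# Pairs sharing 3+ entities are candidates for the same semantic cluster.
cluster_candidates = [
    (a, b, sorted(audit[a] & audit[b]))
    for a, b in combinations(sorted(audit), 2)
    if len(audit[a] & audit[b]) >= MIN_SHARED
]

# Simplification: a piece sharing no entity with any other piece is an orphan.
orphans = [
    piece for piece in audit
    if all(not (audit[piece] & audit[other]) for other in audit if other != piece)
]

print(cluster_candidates)
print(orphans)
```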

Practical change: A SaaS company discovered their 200+ blog posts actually revolved around only 12 core entities but defined them inconsistently across pieces. The same concepts had different names, and the same terms meant different things depending on the author. This audit revealed the semantic fragmentation preventing AI engines from building coherent knowledge representations.

Step 2: Establish Entity Authority Hierarchy

Designate authoritative pieces for each major entity in your domain. These become semantic anchors—the definitive source for understanding what a concept means within your content ecosystem. Authority pieces should provide clear definitions, explicit context, and comprehensive relationship mapping to connected entities.

Create a simple hierarchy: primary entities (5-8 core concepts your domain revolves around), secondary entities (15-25 supporting concepts), tertiary entities (everything else). Ensure each primary entity has one authoritative piece that defines it completely. Secondary entities can share authority across 2-3 pieces if they appear in different contexts.
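A hierarchy like this is easy to validate mechanically. The following sketch checks the limits described above (one authoritative piece per primary entity, up to three per secondary entity). The data and the function name `authority_issues` are hypothetical, and flagging secondary entities with no authority piece at all is an added assumption.

```python
from collections import defaultdict

# Hypothetical entity tiers and authority designations.
tiers = {
    "customer data platform": "primary",
    "identity resolution": "primary",
    "audience segmentation": "secondary",
}
# (content piece, entity it is designated authoritative for)
authority = [
    ("cdp-definitive-guide", "customer data platform"),
    ("cdp-overview-2023", "customer data platform"),
    ("segmentation-basics", "audience segmentation"),
]

MAX_AUTHORITIES = {"primary": 1, "secondary": 3}  # limits described in Step 2

def authority_issues(tiers, authority):
    """Return {entity: problem} for entities that violate the hierarchy rules."""
    pieces = defaultdict(list)
    for piece, entity in authority:
        pieces[entity].append(piece)
    issues = {}
    for entity, tier in tiers.items():
        limit = MAX_AUTHORITIES.get(tier)
        if limit is None:
            continue  # tertiary entities carry no authority requirement here
        count = len(pieces[entity])
        if count == 0:
            issues[entity] = "no authoritative piece"
        elif count > limit:
            issues[entity] = f"{count} authoritative pieces, expected at most {limit}"
    return issues

issues = authority_issues(tiers, authority)
print(issues)
```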

Practical change: Instead of mentioning “customer data platform” across 30 articles with slight variations in definition, create one definitive guide that establishes exactly what you mean by that entity, then reference that semantic anchor (explicitly or implicitly) whenever the term appears elsewhere.

Step 3: Build Semantic Clusters

Group content into tight clusters around shared entity constellations. A cluster might be 5-15 pieces that all discuss overlapping entities from different angles—not because they’re about the same topic (traditional categorization) but because they share semantic DNA.

Within each cluster, ensure high semantic coherence. Use consistent terminology. When you define relationships between entities in one piece, maintain those relationships in other cluster members. Think of clusters as local meaning neighborhoods where AI engines can navigate easily because the semantic landscape is consistent.
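One simple way to propose clusters from audit data is to link any two pieces that share enough entities and then take the connected groups. This is only a sketch under that assumption: the threshold of two shared entities, the piece names, and `build_clusters` are all illustrative, and the result is a starting point for editorial review rather than a final grouping.

```python
def build_clusters(pieces, min_shared=2):
    """Group pieces into connected components linked by shared entities."""
    names = sorted(pieces)
    neighbors = {n: set() for n in names}
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            if len(pieces[a] & pieces[b]) >= min_shared:
                neighbors[a].add(b)
                neighbors[b].add(a)
    clusters, seen = [], set()
    for start in names:
        if start in seen:
            continue
        # Depth-first walk collects everything reachable from this piece.
        stack, component = [start], set()
        while stack:
            node = stack.pop()
            if node in component:
                continue
            component.add(node)
            stack.extend(neighbors[node] - component)
        seen |= component
        clusters.append(sorted(component))
    return clusters

# Hypothetical pieces and their core entities.
pieces = {
    "cds-implementation": {"clinical decision support", "alert fatigue", "EHR"},
    "cds-outcomes": {"clinical decision support", "alert fatigue", "readmission"},
    "fhir-basics": {"interoperability standards", "FHIR", "EHR"},
    "hl7-vs-fhir": {"interoperability standards", "FHIR", "HL7"},
}
clusters = build_clusters(pieces)
print(clusters)
```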

Practical change: A healthcare technology company restructured their content from traditional categories (product features, case studies, industry news) into semantic clusters around entities like “clinical decision support,” “interoperability standards,” and “patient data aggregation.” Each cluster maintained tight entity overlap while approaching topics from different angles—technical implementation, regulatory compliance, clinical outcomes.

Step 4: Create Semantic Bridges Between Clusters

Clusters shouldn’t exist in isolation. Build bridge pieces that share entities across multiple clusters, creating long-range semantic pathways. These bridges serve a specific function: they let AI engines traverse from one conceptual neighborhood to another without losing coherence.

A bridge piece might discuss how “clinical decision support” (cluster A) integrates with “patient data aggregation” (cluster B) through “standardized data models” (cluster C). It doesn’t belong exclusively to any single cluster but connects all three through shared entity relationships.
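Existing bridge pieces can be found by counting how many clusters each piece touches. The sketch below reuses the three-cluster example from the paragraph above; the piece names and the rule that a bridge touches at least two clusters are assumptions for illustration.

```python
# Hypothetical core entities per cluster, taken from the bridge example.
cluster_entities = {
    "A": {"clinical decision support"},
    "B": {"patient data aggregation"},
    "C": {"standardized data models"},
}
piece_entities = {
    "cds-integration-overview": {
        "clinical decision support",
        "patient data aggregation",
        "standardized data models",
    },
    "cds-alert-tuning": {"clinical decision support", "alert fatigue"},
}

def clusters_touched(entities):
    """Clusters whose core entities appear in a piece."""
    return sorted(c for c, core in cluster_entities.items() if core & entities)

# A piece that touches two or more clusters is treated as a bridge.
bridges = {
    piece: clusters_touched(entities)
    for piece, entities in piece_entities.items()
    if len(clusters_touched(entities)) >= 2
}
print(bridges)
```

Cluster pairs that no piece in `bridges` connects are the gaps where a new bridge piece would add the most.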

Practical change: Instead of creating yet another “beginner’s guide to X,” create pieces explicitly designed to connect established concepts—showing how entity relationships span different domains within your expertise area. These bridges dramatically increase the probability that AI engines can assemble complete answers from your content because you’ve pre-built the conceptual pathways they need.

Step 5: Implement Entity Disambiguation Systematically

Go through each piece and eliminate semantic ambiguity. When you first mention an entity, provide enough context that an AI engine can’t misinterpret it. Use consistent naming conventions across all content. If an entity has multiple valid names, choose one primary version and stick with it.

For ambiguous terms, add explicit disambiguation. Don’t write “The platform connects data sources.” Write “The customer data platform connects healthcare data sources including EHR systems, patient portals, and diagnostic devices.” Every additional entity reference reduces ambiguity and improves AI interpretability.
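A first pass at finding ambiguous references can be scripted. The sketch below flags sentences that use a vague term outside a known, fully qualified entity name. The term list comes from the examples in this step; the `QUALIFIED` list and the function name are assumptions, and the sentence splitter is deliberately naive.

```python
import re

# Vague terms to look for; extend the pattern for your own domain.
VAGUE = re.compile(r"\b(?:platforms?|solutions?|systems?|technolog(?:y|ies))\b")
# Assumed list of fully qualified entity names that count as unambiguous.
QUALIFIED = ("customer data platform", "ehr systems")

def flag_vague_sentences(text):
    """Return sentences that use a vague term outside a qualified entity name."""
    flagged = []
    for sentence in re.split(r"(?<=[.!?])\s+", text.strip()):
        remainder = sentence.lower()
        for name in QUALIFIED:
            remainder = remainder.replace(name, "")
        if VAGUE.search(remainder):
            flagged.append(sentence)
    return flagged

draft = (
    "The platform connects data sources. "
    "The customer data platform connects healthcare data sources including "
    "EHR systems, patient portals, and diagnostic devices."
)
flagged = flag_vague_sentences(draft)
print(flagged)
```

Only the first sentence is flagged; the second passes because every vague word sits inside a qualified entity name.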

Practical change: A fintech company found that 40% of their entity references were ambiguous without human context-reading. “Platform,” “solution,” “system,” “technology”—vague terms that humans understand from context but AI engines struggle to disambiguate. They replaced these with specific entity names, dramatically improving citation accuracy in AI-generated content.

Step 6: Optimize Semantic Distance to Target Queries

For each major query intent you want to capture, analyze the semantic distance between typical query formulations and your existing content. Use AI search engines themselves for this: run target queries through ChatGPT, Perplexity, and Gemini. What gets cited? What semantic characteristics do those cited sources have?

Then adjust your content’s semantic positioning. If engines cite sources with specific entity constellations, ensure your content includes those entities. If they favor certain reasoning structures, incorporate those patterns. You’re not copying cited content—you’re understanding what semantic position successful content occupies and moving your own content closer to that position in conceptual space.

Practical change: A marketing automation company identified 20 high-value query intents they wanted to capture. For each, they reverse-engineered the semantic characteristics of content AI engines currently cite. Then they systematically reduced semantic distance between their content and those target positions by adjusting entity usage, definitional clarity, and reasoning structures. Within 90 days, they appeared in AI-generated answers for 15 of the 20 target queries.

Step 7: Create Explicit Reasoning Chains

AI engines prioritize content with transparent logical structure. Make your reasoning explicit rather than implicit. When you make a claim, immediately follow with evidence or explanation. When you connect two concepts, state the connection explicitly rather than assuming readers (or AI systems) will infer it.

Structure content in reasoning chains: claim → evidence → interpretation → implication. This sequential clarity helps AI engines parse your logic and cite you accurately. Implicit reasoning forces engines to infer connections, which increases error probability and reduces citation likelihood.

Practical change: Instead of writing “Customer data platforms improve marketing efficiency,” write “Customer data platforms improve marketing efficiency by unifying customer data from multiple sources (data aggregation), creating single customer views (identity resolution), and enabling precise audience segmentation (targeting optimization). This reduces wasted ad spend and increases conversion rates.” The explicit reasoning chain makes it trivial for AI engines to understand and cite the causal mechanism.

Step 8: Establish Semantic Feedback Loops

Monitor how AI engines actually traverse and cite your content. Run regular searches through ChatGPT, Perplexity, and Gemini for queries in your domain. Which of your pieces get cited? For what query patterns? When engines synthesize answers, which entity relationships do they extract from your content?

Use this feedback to refine your quantum semantic mesh. If engines consistently misinterpret certain entity relationships, add disambiguation. If they cite pieces together that you didn’t anticipate having semantic overlap, investigate what created that entanglement—you might discover new bridging opportunities.
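If the audit results are recorded by hand, a few lines of code can summarize them. This sketch assumes a log with one row per test run (engine plus query) listing which of your pieces were cited. The field names and sample rows are hypothetical, and nothing here calls any AI engine's API.

```python
from collections import Counter

# Hypothetical audit log: one row per (engine, query) test run, listing
# which of your pieces appeared as a cited source in the answer.
audit_log = [
    {"engine": "ChatGPT", "query": "automate contract renewals", "cited": ["renewal-guide"]},
    {"engine": "Perplexity", "query": "automate contract renewals",
     "cited": ["renewal-guide", "obligation-faq"]},
    {"engine": "Gemini", "query": "automate contract renewals", "cited": []},
    {"engine": "ChatGPT", "query": "track contract obligations", "cited": []},
]

def citation_rate(log):
    """Share of test runs in which at least one of your pieces was cited."""
    return sum(1 for row in log if row["cited"]) / len(log)

# How often each piece was cited across all runs.
per_piece = Counter(piece for row in audit_log for piece in row["cited"])

# Pieces cited together in one answer hint at overlap worth investigating.
co_cited = Counter(
    tuple(sorted(row["cited"])) for row in audit_log if len(row["cited"]) > 1
)

print(citation_rate(audit_log), per_piece, co_cited)
```

Recomputing these numbers after each audit cycle gives a consistent baseline for judging whether restructuring changed anything.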

Practical change: A B2B software company created a monthly “AI citation audit” where they tracked every instance of their content appearing in AI-generated answers. They mapped which semantic characteristics correlated with citation success, then systematically strengthened those characteristics across their content library. Over six months, their overall citation rate increased 280% as they tuned their semantic mesh based on real AI engine behavior.

Step 9: Reduce Semantic Noise and Redundancy

Eliminate content that doesn’t contribute unique semantic value. If five pieces all define the same entity without adding new relationship information, you’re creating semantic noise—multiple weak signals rather than one strong signal. Consolidate or differentiate.

Review your content library for semantic redundancy. Multiple pieces covering the same entity constellation from the same perspective create confusion for AI engines trying to determine which represents your authoritative position. Either merge redundant pieces into comprehensive authorities or differentiate them through distinct entity relationships and perspectives.
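Redundancy of this kind shows up as near-identical entity sets. The sketch below uses Jaccard overlap to list merge candidates; the 0.8 cutoff and the article names are assumptions, and entity overlap alone cannot tell whether two pieces take the same perspective, so the output is a review list rather than a merge decision.

```python
from itertools import combinations

def jaccard(a, b):
    """Overlap of two entity sets: |intersection| / |union|."""
    return len(a & b) / len(a | b) if a | b else 0.0

# Hypothetical entity sets for articles on one topic.
library = {
    "threat-detection-101": {"network threat detection", "IDS", "anomaly detection"},
    "detecting-network-threats": {"network threat detection", "IDS", "anomaly detection"},
    "threat-detection-for-ot": {"network threat detection", "OT networks", "Modbus"},
}

REDUNDANCY_THRESHOLD = 0.8  # assumed cutoff; tune against your own library

merge_candidates = [
    (a, b)
    for a, b in combinations(sorted(library), 2)
    if jaccard(library[a], library[b]) >= REDUNDANCY_THRESHOLD
]
print(merge_candidates)
```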

Practical change: A cybersecurity firm reduced their content library from 450 pieces to 280 by merging semantically redundant articles. Counter-intuitively, their AI engine visibility increased 170% because they eliminated competing signals. Instead of five mediocre articles all partially addressing “network threat detection,” they created one comprehensive semantic anchor that became the definitive source.

Step 10: Maintain Semantic Coherence Over Time

As you add new content, ensure it integrates into your existing quantum semantic mesh rather than fragmenting it. Before publishing, ask: which cluster does this belong to? What entities does it share with existing content? Does it introduce new entity definitions that contradict established ones? Does it create new semantic bridges or reinforce existing ones?

Create editorial guidelines that prioritize semantic coherence. When writers draft content, they should reference existing entity definitions rather than creating new ones. When content introduces new concepts, those concepts should be explicitly positioned relative to your existing entity hierarchy.
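Part of that editorial check can run before a human review. This sketch compares the definitions in a draft against an established glossary and flags conflicts and new entities. The glossary, the draft, and `coherence_flags` are hypothetical, and the exact-text comparison is a crude stand-in for a real similarity measure.

```python
# Hypothetical glossary of established entity definitions.
glossary = {
    "customer data platform": (
        "software that unifies customer data from multiple sources into persistent profiles"
    ),
}

def coherence_flags(draft_definitions, glossary):
    """Compare a draft's entity definitions with the established glossary."""
    flags = []
    for entity, definition in draft_definitions.items():
        established = glossary.get(entity.lower())
        if established is None:
            flags.append((entity, "new entity: position it in the hierarchy"))
        elif established.strip().lower() != definition.strip().lower():
            flags.append((entity, "definition differs from the established one"))
    return flags

draft = {
    "Customer Data Platform": "a marketing dashboard for campaign reporting",
    "zero-party data": "data a customer intentionally shares with a brand",
}
flags = coherence_flags(draft, glossary)
for entity, reason in flags:
    print(f"{entity}: {reason}")
```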

Practical change: A media company implemented “semantic coherence reviews” where editors verify that new articles maintain consistent entity definitions and relationships with existing content. Articles that introduce entity conflicts or definitional ambiguity get flagged for revision before publication. This prevents semantic fragmentation as the content library scales.

Recommended Tools

Building and maintaining quantum semantic mesh requires specific tooling for entity extraction, semantic analysis, and relationship mapping:

Obsidian

Note-taking and knowledge management tool with graph view that visualizes entity relationships. Use it to map your content’s semantic structure manually. Create notes for each core entity, link them based on relationships, and visualize the resulting network. Helps identify semantic orphans and cluster opportunities. The graph view is particularly useful for understanding topology.

Airtable

Database tool for tracking entity hierarchies and content-entity relationships. Build tables for: content pieces, entities, entity types, authority designations, cluster assignments. Create linked records between tables to map which entities appear in which content. Use filters and grouping to identify semantic gaps and redundancies. Airtable’s flexibility beats rigid spreadsheet structures for complex semantic mapping.

Semrush Writing Assistant

While primarily SEO-focused, the semantic analysis features help identify entity opportunities in your content. Shows related concepts and semantic keywords that engines expect to see alongside your core entities. Use it to ensure you’re including complete entity constellations rather than isolated concepts. The competitor analysis reveals semantic patterns in successful content.

Google Search Console

Essential for tracking which queries lead to your content. While you’re optimizing for AI engines, traditional search queries reveal user intent patterns that inform semantic positioning. Look for query patterns where you rank but don’t get clicks; these indicate semantic misalignment between query intent and your content’s meaning representation. Use the Queries tab of the Performance report to identify opportunities to reduce semantic distance.

ChatGPT Plus / Claude Pro / Gemini Advanced

Use the AI engines themselves for testing semantic distance and citation probability. Run target queries, analyze what gets cited and why. Upload your content and ask the AI to map entity relationships and identify ambiguities. These tools are both your audience and your testing infrastructure. Note: using them for testing doesn’t require subscriptions, but paid tiers offer better models for detailed analysis.

Notion

Excellent for building and maintaining your entity authority hierarchy. Create a database of entities with properties: definition, category (primary/secondary/tertiary), authoritative content pieces, related entities, disambiguation notes. Use linked databases to connect entities to content pieces. Notion’s flexibility lets you build custom views showing entity overlap between pieces.

Perplexity

Specifically useful for understanding source citation patterns. Run queries in your domain and analyze which sources Perplexity cites, what semantic characteristics those sources share, and how Perplexity synthesizes information across multiple sources. This reveals real-world semantic traversal patterns that you can replicate in your own content structure.

Google Analytics 4

Track user behavior patterns that reflect semantic coherence. Look at content flow—do users naturally move between semantically related pieces even without explicit navigation links? High flow between pieces suggests semantic entanglement that users (and AI engines) recognize. Low flow despite topical similarity suggests semantic distance problems.

Rank Math or Yoast (WordPress)

SEO plugins that include schema markup generators. While quantum semantic mesh goes beyond traditional schema, proper structured data still helps AI engines parse entities and relationships. Use these tools to implement basic entity markup, then extend with custom schema for advanced semantic annotation.

Schema.org Validator

Test your structured data implementation to ensure AI engines can parse it correctly. The validator catches syntax errors that prevent semantic interpretation. While AI engines can understand content without perfect schema, clean structured data reduces friction in entity extraction and relationship mapping.
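As an illustration of basic entity markup, here is a minimal sketch that builds a JSON-LD object for an article using the schema.org `Article` type with `about` and `mentions` properties pointing at `DefinedTerm` entries. The helper name `article_jsonld`, the example URL, and the entity descriptions are assumptions made for this example; how any given AI engine uses such markup is not something this sketch can confirm.

```python
import json

def article_jsonld(headline, url, about, mentions):
    """Build a schema.org Article with entity annotations.

    `about` and `mentions` are lists of (name, description) pairs.
    """
    def term(name, description):
        return {"@type": "DefinedTerm", "name": name, "description": description}

    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "url": url,
        "about": [term(n, d) for n, d in about],        # core entities
        "mentions": [term(n, d) for n, d in mentions],  # supporting entities
    }

# Hypothetical article and entities, for illustration only.
doc = article_jsonld(
    headline="User Authentication Patterns for SaaS",
    url="https://example.com/user-authentication-patterns",
    about=[("user authentication", "Verifying the identity of a user.")],
    mentions=[("data security", "Protecting data from unauthorized access.")],
)

# Paste the output into a <script type="application/ld+json"> tag, then validate it.
print(json.dumps(doc, indent=2))
```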

PageSpeed Insights

Semantic mesh means nothing if AI engines can’t access your content efficiently. Use PageSpeed to ensure technical performance doesn’t create barriers to discovery. AI engines may have different crawl budgets and performance tolerances than traditional search engines—optimize accordingly.

Implementation Comparison: Traditional vs. Quantum Approach

| Dimension | Traditional Linking | Quantum Semantic Mesh |
|---|---|---|
| Relationship Model | Fixed, explicit hyperlinks | Probabilistic semantic proximity |
| Navigation Logic | Predetermined pathways | Dynamic contextual traversal |
| Optimization Target | Human user flow | AI engine interpretation |
| Connection Basis | Manual editorial decisions | Entity constellation overlap |
| Scalability | Decreases with content volume | Increases with semantic density |
| Maintenance Burden | High: must update links manually | Lower: relationships emerge from content |
| Citation Predictability | Low: depends on many external factors | Higher: based on semantic distance |
| Cross-Topic Connection | Difficult: requires finding link opportunities | Natural: emerges from shared entities |

This isn’t about replacing traditional site architecture entirely. It’s about layering semantic relationships on top of (or alongside) existing structures, giving AI engines additional pathways through your content that match how they actually process information.

Advanced Framework: The Semantic Gravity Model

Think of entity authority as creating “semantic gravity wells” in your content space. More authoritative entities—those with comprehensive definitions, clear relationships, and extensive contextual usage—exert stronger gravitational pull. Content pieces orbit these authorities based on how many high-weight entities they share.

In this model, quantum semantic mesh architecture becomes about managing gravitational fields. You want multiple strong gravity wells (core entity authorities) with content orbiting at various distances based on semantic proximity. Related concepts orbit close, peripheral concepts orbit distant, and bridge pieces occupy Lagrange points where they’re influenced by multiple gravitational fields equally.

This framework helps visualize citation probability. AI engines are like objects moving through your semantic space. They’re pulled toward strong gravitational wells (authoritative entities) and follow trajectories determined by the combined gravitational influence of multiple entities. Content positioned in high-gravity regions gets encountered more frequently. Content in gravitational dead zones gets bypassed.

Implementing the gravity model:

Identify your gravitational centers: Which 5-8 entities are genuinely core to your domain? These should be concepts you could anchor entire knowledge ecosystems around. For a SaaS company, this might be entities like “cloud infrastructure,” “data security,” “API architecture,” “user authentication,” and “scalability patterns.”

Build maximum-gravity authorities: Create definitive content pieces for each gravitational center. These aren’t just definitions—they’re comprehensive treatments that establish entity meaning, map relationships to connected concepts, provide clear usage contexts, and offer evidence for claims. Think 3000-5000 words of pure semantic density around a single core entity.

Position content in orbital patterns: Arrange other content based on semantic distance from gravitational centers. Pieces sharing 3-4 entities with a center orbit close. Pieces sharing 1-2 entities orbit distant. Pieces sharing entities across multiple centers occupy bridge positions (Lagrange points) connecting gravitational fields.

Create gravitational pathways: AI engines traverse your space by moving along gravitational gradients—from high-gravity regions toward other high-gravity regions. Ensure these pathways exist through bridge content that shares entities across centers. If you have a content gap where two gravity wells have no gravitational pathway connecting them, AI engines can’t easily move from one topic domain to another.

Measure gravitational influence: For each piece of content, calculate its gravitational score—how strongly it’s pulled by various entity authorities based on shared entities and semantic proximity. Content with high gravitational influence from multiple centers becomes high-citation-probability content because it sits at the intersection of multiple meaning fields.
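The orbital and scoring steps above can be sketched in a few lines. This is an illustrative model only: the article does not define a scoring formula, so the score here is assumed to be the fraction of a center’s entities that a piece shares, five or more shared entities are treated as a close orbit, and the center names and entity sets are hypothetical.

```python
# Hypothetical gravitational centers: each core entity plus the related
# entities that its authority piece defines.
CENTERS = {
    "data security": {
        "data security", "encryption", "access control",
        "compliance", "user authentication",
    },
    "API architecture": {
        "API architecture", "REST", "rate limiting",
        "user authentication", "versioning",
    },
}

def orbit(shared_count):
    """Map a shared-entity count to an orbit, following the 3-4 / 1-2 rule."""
    if shared_count >= 3:
        return "close"
    if shared_count >= 1:
        return "distant"
    return "none"

def gravity_profile(piece_entities, centers=CENTERS):
    """For each center, report shared entities, orbit, and an assumed score."""
    profile = {}
    for name, center_entities in centers.items():
        shared = piece_entities & center_entities
        profile[name] = {
            "shared": len(shared),
            "orbit": orbit(len(shared)),
            # Assumed score: share of the center's entities the piece covers.
            "score": len(shared) / len(center_entities),
        }
    return profile

def is_bridge(profile):
    """A bridge piece shares at least one entity with two or more centers."""
    return sum(1 for p in profile.values() if p["shared"] > 0) >= 2

piece = {"user authentication", "encryption", "access control", "rate limiting"}
profile = gravity_profile(piece)
print(profile)
print("bridge piece:", is_bridge(profile))
```

Running the same function over a draft’s entity list before publishing gives a rough sense of where the piece would sit relative to existing centers, under the assumptions stated above.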

The beauty of this framework is its predictive power. You can estimate citation probability for new content before publishing by calculating where it will sit in your semantic gravity field. Content positioned in high-gravity, well-connected regions will naturally get more AI engine attention than content in gravitational peripheries.

Advantages and Limitations

The quantum semantic mesh approach delivers substantial advantages in AI-first discoverability, but it’s not without constraints and implementation challenges.

Primary advantage: you’re optimizing for how AI engines actually work rather than trying to game systems designed for different purposes. Traditional SEO optimizes for ranking algorithms that evaluate external signals (backlinks, domain authority, user engagement metrics). Quantum semantic mesh optimizes for interpretation systems that evaluate semantic signals (entity relationships, meaning proximity, contextual coherence). This fundamental alignment means your optimization efforts directly improve the specific factors AI engines use for citation decisions. When you reduce semantic distance or increase entity disambiguation, you’re making content genuinely more useful to AI systems—not just gaming metrics.

Second, the approach scales better than traditional link building. Each piece of content you add with proper semantic structure strengthens your entire mesh rather than requiring new linking campaigns. The value is multiplicative, not additive. Your 50th piece in a tight semantic cluster is dramatically more valuable than your first because it reinforces entity relationships and creates additional semantic pathways. Compare this to traditional SEO where acquiring the 50th backlink has diminishing marginal returns. The quantum mesh gets stronger as it grows denser, creating compound returns on content investment.

Third, semantic mesh architecture creates platform-independent value. You’re not optimizing for Google’s algorithm or Bing’s ranking factors—you’re optimizing for semantic principles that all AI interpretation systems must navigate. This means your optimization work remains relevant as new AI engines emerge and existing ones evolve. When the next generation of AI search engines launches, your content is already positioned for their semantic interpretation systems because you built for universal principles rather than platform-specific quirks.

Fourth, the citation stability is significantly higher. Once you establish strong semantic positioning—your content occupies a clear, well-defined region of conceptual space—that position is stable across algorithm updates and ranking fluctuations. AI engines might change how they traverse semantic space or weight different factors, but if your content has genuine semantic clarity and entity precision, it remains discoverable. Traditional rankings can collapse overnight from algorithm updates; semantic position is more resilient because it’s based on content fundamentals rather than external signals.

Now the limitations, which are non-trivial. Implementation requires significant upfront investment in content restructuring and semantic analysis. You can’t just sprinkle some entities into existing content and call it a quantum semantic mesh. You need to audit comprehensively, rebuild entity hierarchies, potentially merge or retire dozens of pieces, and train content teams on new approaches. For organizations with hundreds or thousands of existing content pieces, this is months of intensive work. The ROI is real but delayed—you won’t see citation probability increases until substantial mesh architecture is in place.

Second limitation: semantic coherence becomes increasingly difficult to maintain as organizations scale. When one person or a small team controls all content creation, maintaining consistent entity definitions and relationships is manageable. When ten different writers across multiple departments contribute content, semantic fragmentation becomes almost inevitable. Different people define concepts differently, use varying terminology, create incompatible entity relationships. This requires robust editorial processes and semantic governance that most organizations lack. The quantum mesh framework technically works at any scale, but organizational complexity undermines semantic coherence.
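One lightweight form of semantic governance is a terminology check that runs on drafts before publication. The sketch below assumes a hand-maintained glossary mapping each canonical entity name to variants that writers should avoid; the glossary contents and the function name `find_variants` are hypothetical.

```python
import re

# Hypothetical glossary: canonical entity name -> variants to avoid.
GLOSSARY = {
    "user authentication": ["user auth", "login verification"],
    "API architecture": ["API design structure"],
}

def find_variants(text, glossary=GLOSSARY):
    """Return (variant, canonical) pairs for non-canonical terms found in text."""
    hits = []
    for canonical, variants in glossary.items():
        for variant in variants:
            # Word boundaries keep "user auth" from matching "user authentication".
            pattern = r"\b" + re.escape(variant) + r"\b"
            if re.search(pattern, text, flags=re.IGNORECASE):
                hits.append((variant, canonical))
    return hits

draft = "Our user auth flow relies on login verification."
for variant, canonical in find_variants(draft):
    print(f"Replace '{variant}' with '{canonical}'")
```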

Third, measuring success is harder than traditional SEO. You can’t just check rankings or traffic numbers. You need to track citation rates across multiple AI engines, monitor which semantic pathways engines actually traverse, and analyze whether your semantic distance optimization is working. These metrics require custom tracking and analysis—there’s no “quantum semantic mesh analytics dashboard” you can buy. Most marketing teams aren’t equipped to measure semantic performance, which makes demonstrating ROI to stakeholders challenging.
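In the absence of an off-the-shelf dashboard, a simple starting point is a log of manual test runs. The sketch below assumes each observation records the engine, the query, and whether your content was cited; the rows shown are made-up placeholders, not real measurements.

```python
from collections import defaultdict

# Hypothetical log of manual test runs: (engine, query, was_our_content_cited).
observations = [
    ("perplexity", "what is api rate limiting", True),
    ("perplexity", "how to secure cloud data", False),
    ("chatgpt", "what is api rate limiting", False),
    ("chatgpt", "how to secure cloud data", True),
    ("chatgpt", "user authentication best practices", True),
]

def citation_rates(rows):
    """Share of test queries per engine in which our content was cited."""
    totals = defaultdict(int)
    cited = defaultdict(int)
    for engine, _query, was_cited in rows:
        totals[engine] += 1
        cited[engine] += int(was_cited)
    return {engine: cited[engine] / totals[engine] for engine in totals}

for engine, rate in citation_rates(observations).items():
    print(f"{engine}: {rate:.0%} of test queries cited our content")
```

Repeating the same query set on a fixed schedule makes the rates comparable over time, which is what a stakeholder report would need.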

Fourth, the framework assumes AI engines continue evolving toward semantic understanding rather than reverting to simpler pattern matching. This is a reasonable assumption given current trajectories, but it’s not guaranteed. If AI development hits unexpected limits or architectural paradigms shift, semantic mesh optimization might become less relevant. The risk is lower than with platform-specific optimization, but it exists. You’re betting on semantic principles remaining central to AI interpretation—a safe bet, but still a bet.

Fifth, quantum semantic mesh doesn’t solve the fundamental tension between human readability and machine optimization. Content that maximizes semantic density and entity clarity for AI engines can feel clinical and dry to human readers. Finding the sweet spot—high semantic value without sacrificing human engagement—requires skilled writing that many content teams struggle to produce. The framework works technically, but execution quality varies dramatically based on writer capability.

Final limitation: there’s a first-mover advantage that creates competitive moats. Organizations that implement quantum semantic mesh early in their domains can establish entity authority that’s difficult for later entrants to displace. If you’re entering a space where competitors already have strong semantic positioning, catching up requires either superior semantic density (harder to achieve) or finding entity gaps they haven’t claimed (increasingly rare in mature markets). The dynamics favor early adopters, which disadvantages latecomers in established domains.

These limitations aren’t fatal; they’re operational realities to navigate. Organizations that invest in proper implementation, maintain semantic governance, build measurement capabilities, and produce quality execution can overcome them. But pretending they don’t exist sets unrealistic expectations. As discussed in our piece on understanding E-E-A-T in the age of generative AI, success requires acknowledging both opportunities and constraints rather than treating any framework as a magic solution.

The Future Evolution: Temporal Semantic Meshes

Current quantum semantic mesh implementations treat meaning as static—you establish entity definitions and relationships that persist until you manually update them. But meaning evolves over time. What “artificial intelligence” signified in 2015 differs substantially from its 2025 meaning, which will differ from 2030’s interpretation. The next evolution of semantic mesh architecture will incorporate temporal dimensions.

Temporal semantic meshes track how entity meanings shift across time and maintain multiple simultaneous meaning states that AI engines can reference based on query context. When someone asks “how did AI capabilities evolve?”, engines would traverse not just your current content’s semantic space but the historical evolution of your entity relationships. This creates four-dimensional content architecture where semantic proximity includes time as a variable.

Early implementations might be simple: content versioning that preserves historical entity definitions alongside current ones, allowing AI engines to cite your content for questions about both current state and historical evolution. More sophisticated versions could maintain continuous semantic evolution tracking where entity meanings shift gradually rather than in discrete versions.

The technical implementation gets complex. You’d need mechanisms to:

- Track entity definition changes over time with granular timestamps

- Maintain semantic relationship histories showing how entities connected differently in past states

- Create temporal bridging content that explicitly maps meaning evolution

- Signal to AI engines which temporal meaning state applies to which query contexts
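The first mechanism in the list, timestamped definition tracking, could start as something very small. The following sketch assumes a hypothetical `EntityHistory` class that stores dated definitions and returns whichever one was in effect on a given date; the definition strings are placeholders, and how such history would be exposed to AI engines is left open.

```python
from bisect import bisect_right
from dataclasses import dataclass, field
from datetime import date

@dataclass
class EntityHistory:
    """Dated definitions for one entity, kept sorted by effective date."""
    name: str
    _dates: list = field(default_factory=list)
    _definitions: list = field(default_factory=list)

    def record(self, effective: date, definition: str) -> None:
        """Insert a definition that takes effect on `effective`."""
        idx = bisect_right(self._dates, effective)
        self._dates.insert(idx, effective)
        self._definitions.insert(idx, definition)

    def definition_as_of(self, when: date):
        """Return the definition in effect on `when`, or None if none existed yet."""
        idx = bisect_right(self._dates, when)
        return self._definitions[idx - 1] if idx else None

# Placeholder definitions, for illustration only.
ai = EntityHistory("artificial intelligence")
ai.record(date(2023, 1, 1), "placeholder definition, 2023 revision")
ai.record(date(2015, 1, 1), "placeholder definition, 2015 revision")

print(ai.definition_as_of(date(2020, 6, 1)))
print(ai.definition_as_of(date(2025, 1, 1)))
```

Keeping the dates sorted on insert means lookups stay a single binary search even when revisions are recorded out of order.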

But the value proposition is compelling. Temporal semantic meshes would let your content serve as authoritative sources for both current information and historical context, dramatically expanding citation opportunities. They would also create natural advantages against competitors who only maintain current-state semantic architectures.

Beyond temporal evolution, future quantum semantic mesh implementations will likely incorporate multi-modal semantic entanglement—connecting text, images, video, and structured data in unified meaning networks. An entity like “cloud infrastructure architecture” could have text definitions, architectural diagrams, video explanations, and structured specifications all semantically entangled so AI engines can cite the most appropriate format for each query context.

Imagine asking an AI assistant “show me how cloud infrastructure scales horizontally” and having it cite your text explanation for conceptual understanding, your architectural diagram for visual reference, and your structured specification data for technical precision—all pulled from semantically entangled resources rather than requiring you to package them together explicitly. That’s the next layer of quantum semantic mesh.

Conclusion

Quantum semantic mesh represents a fundamental shift from optimizing for predetermined pathways to optimizing for probabilistic discovery—matching how AI engines actually traverse and interpret information. By building content architecture around semantic relationships rather than traditional site structure, you create discovery pathways that emerge naturally from meaning proximity, eliminating the friction between how content is organized and how AI systems navigate it. The framework requires significant upfront investment and ongoing semantic governance, but delivers compound returns through citation probability that scales with semantic density. Organizations that implement quantum semantic mesh now establish positional advantages in AI-engine visibility that become increasingly difficult for competitors to displace.

For more, see: https://aiseofirst.com/prompt-engineering-ai-seo

FAQ

Q: What makes quantum semantic mesh different from traditional internal linking?

A: Quantum semantic mesh operates on probabilistic relationships rather than fixed connections. Traditional linking creates static pathways between pages—you link from A to B, users and crawlers follow those specific paths. The quantum approach models multiple simultaneous relationship states, allowing AI engines to navigate content through contextual relevance rather than predetermined links. When an AI engine processes a query, it doesn’t follow your site structure; it calculates semantic distance between the query and your content’s meaning representation in vector space. Quantum mesh optimizes for this actual navigation behavior rather than assuming engines follow links.

Q: How do AI search engines interpret semantic entanglement between content pieces?

A: AI engines detect semantic entanglement through vector space proximity and shared entity references. When two pieces of content share conceptual DNA—overlapping entities, parallel reasoning structures, complementary knowledge gaps—they become entangled in the AI’s interpretation layer. The engine doesn’t see them as separate documents connected by a link; it sees them as related meaning clusters in semantic space. This creates citation pathways that didn’t exist in your site architecture but emerge from the content’s semantic fingerprints. Entangled pieces get co-cited because the AI recognizes they’re mutually reinforcing sources for related queries.

Q: Can small sites implement quantum semantic mesh effectively?

A: Yes, but the approach differs from enterprise implementations. Small sites focus on depth over breadth—creating dense semantic clusters around 3-5 core topics rather than sprawling networks. Start with entity disambiguation within your niche, ensure consistent terminology across content, and build explicit conceptual bridges in your writing. The quantum effect emerges from semantic coherence, not volume. A 30-piece site with tight entity relationships and clear semantic structure can outperform a 500-piece site with fragmented, inconsistent meaning representation. Quality of semantic architecture matters more than quantity of content.

Q: What role does semantic distance play in AI citation probability?

A: Semantic distance directly influences whether an AI engine surfaces your content as a source. Engines calculate the conceptual gap between a query’s meaning representation and your content’s position in semantic space. Shorter semantic distance—achieved through precise entity matching, clear definitional structures, and contextual alignment—increases citation probability. It’s not about keyword proximity but meaning proximity in vector space. If your content discusses “sustainable packaging solutions” and a query asks about “eco-friendly food delivery containers,” the semantic distance determines whether the engine considers your content relevant despite different surface-level terminology.
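To make “meaning proximity in vector space” concrete, here is a toy calculation using cosine distance, one common way to compare embedding vectors. The three-dimensional vectors are invented for illustration; real embeddings come from a model and have hundreds or thousands of dimensions, and the exact measure any particular AI engine uses is an assumption here.

```python
import math

def cosine_distance(a, b):
    """1 minus cosine similarity; smaller means closer in meaning."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)

# Invented toy vectors standing in for embeddings.
query = [0.9, 0.8, 0.1]            # "eco-friendly food delivery containers"
packaging_page = [0.8, 0.9, 0.2]   # "sustainable packaging solutions"
unrelated_page = [0.1, 0.2, 0.9]   # an unrelated topic

print(round(cosine_distance(query, packaging_page), 3))
print(round(cosine_distance(query, unrelated_page), 3))
```

In this toy setup the packaging page sits much closer to the query than the unrelated page, even though the two phrases share no keywords.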

Q: How will quantum semantic mesh evolve as AI models advance?

A: Future iterations will likely incorporate temporal semantic evolution (tracking how meanings shift across time) and multi-modal semantic entanglement connecting text, images, and structured data in unified meaning networks. We’ll see the emergence of semantic reputation scores, where content’s citation history influences its future discoverability, creating compound advantages for established authorities. The mesh becomes self-reinforcing as AI systems learn which semantic patterns generate reliable answers. Advanced implementations might include adaptive semantic positioning, where content automatically adjusts entity emphasis based on query trend analysis, or predictive semantic clustering that anticipates future entity relationships before they become mainstream.