Published: December 2025 • Updated: December 2025

By Mr Jean Bonnod — Behavioral AI Analyst — https://x.com/aiseofirst

Also associated profiles:

https://www.reddit.com/u/AI-SEO-First

https://aiseofirst.substack.com

The web indexing landscape is experiencing its most significant transformation since Google’s original PageRank algorithm. AI-powered crawlers from OpenAI, Anthropic, Meta, and Perplexity are fundamentally rewriting how content gets discovered, evaluated, and presented to users. Traditional SEO metrics like page rankings and click-through rates are giving way to a new paradigm: reference rates, citation frequency, and generative appearance scores. According to Cloudflare’s 2025 data tracking billions of web requests, GPTBot increased its crawling activity by 305% from May 2024 to May 2025, while overall AI and search crawler traffic rose 18%. These aren’t incremental changes—they represent a structural shift in how the internet functions. This article examines the technological evolution of AI crawlers, the specific mechanisms driving next-generation indexing, and the actionable strategies website owners must implement to maintain visibility as generative search engines reshape digital discovery.

Why This Matters Now

The shift from traditional search engines to AI-powered generative platforms is accelerating at an unprecedented pace. A 2024 Bain & Company study revealed that 60% of internet users now rely on AI assistants for search, with 25% of all searches starting with AI tools like ChatGPT or Perplexity rather than Google. This behavioral transformation is forcing a complete recalibration of online visibility strategies. Cloudflare’s crawler traffic analysis from May 2024 to July 2025 demonstrates the scale of change: GPTBot’s share of total crawler traffic jumped from 2.2% to 7.7%, climbing from #9 to #3 position among all web crawlers. Meta-ExternalAgent emerged from near-zero to capture 19% of AI crawler activity, while legacy players like Bytespider plummeted from 42% to 7% market share.

The economic implications are substantial. Traditional search-driven traffic models are being disrupted by zero-click searches, where 70% of users prefer AI-generated summaries over clicking through to websites. This fundamentally challenges advertising-dependent business models while creating new opportunities for brands that position themselves as authoritative sources within AI training data. The competition is no longer about ranking first on a search results page—it’s about being encoded correctly into the models that generate those answers.

According to Cloudflare data published in August 2025, 80% of AI crawling activity serves training purposes rather than real-time search, with that proportion increasing to 82% in the most recent six-month analysis. Training-related crawling grew steadily throughout 2025, far outpacing search and user-action crawling. This means the content AI crawlers index today directly shapes the knowledge base that will power recommendations, summaries, and answers for years to come. The window for establishing authoritative presence in these training datasets is narrowing as models mature and training frequency decreases.

Concrete Real-World Example

InMotion Hosting, a web hosting provider managing over 100,000 websites, conducted a systematic analysis of AI crawler impact on customer visibility from January to October 2025. They allowed all major AI crawlers (GPTBot, ClaudeBot, PerplexityBot, Meta-ExternalAgent) unrestricted access across their infrastructure while monitoring citation rates in AI-generated recommendations. When users queried “best WordPress hosting for large sites,” ChatGPT referenced InMotion Hosting with high confidence, directly attributing recommendations to their comprehensive technical documentation on scalability. Perplexity cited InMotion as a primary source in 68% of hosting-related queries, specifically pulling from their performance benchmarking articles and uptime guarantee pages.

The results were quantifiable: InMotion observed a 340% increase in brand mentions across AI-generated responses compared to their baseline period (October 2024-January 2025). Direct attribution traffic from ChatGPT grew from 2.1% to 12.8% of total referral traffic. Claude’s recommendations included InMotion in 73% of hosting queries when specifically asked about managed WordPress solutions, drawing from their technical support documentation and caching architecture guides. Grok demonstrated the strongest confidence levels, rarely introducing competitors and focusing recommendations exclusively on InMotion’s scalability features when server infrastructure was mentioned in queries.

This outcome resulted directly from InMotion’s strategic decision to structure their technical documentation with explicit expertise signals, clear product specifications, and comprehensive performance data—exactly the elements AI models prioritize when establishing source authority. Their investment in semantic HTML, detailed Schema.org markup, and original performance research created the foundation for reliable AI citations. The causal mechanism is straightforward: AI models favor sources that provide definitive, well-structured information with clear attribution chains, and InMotion systematically optimized for these exact characteristics throughout 2024-2025.

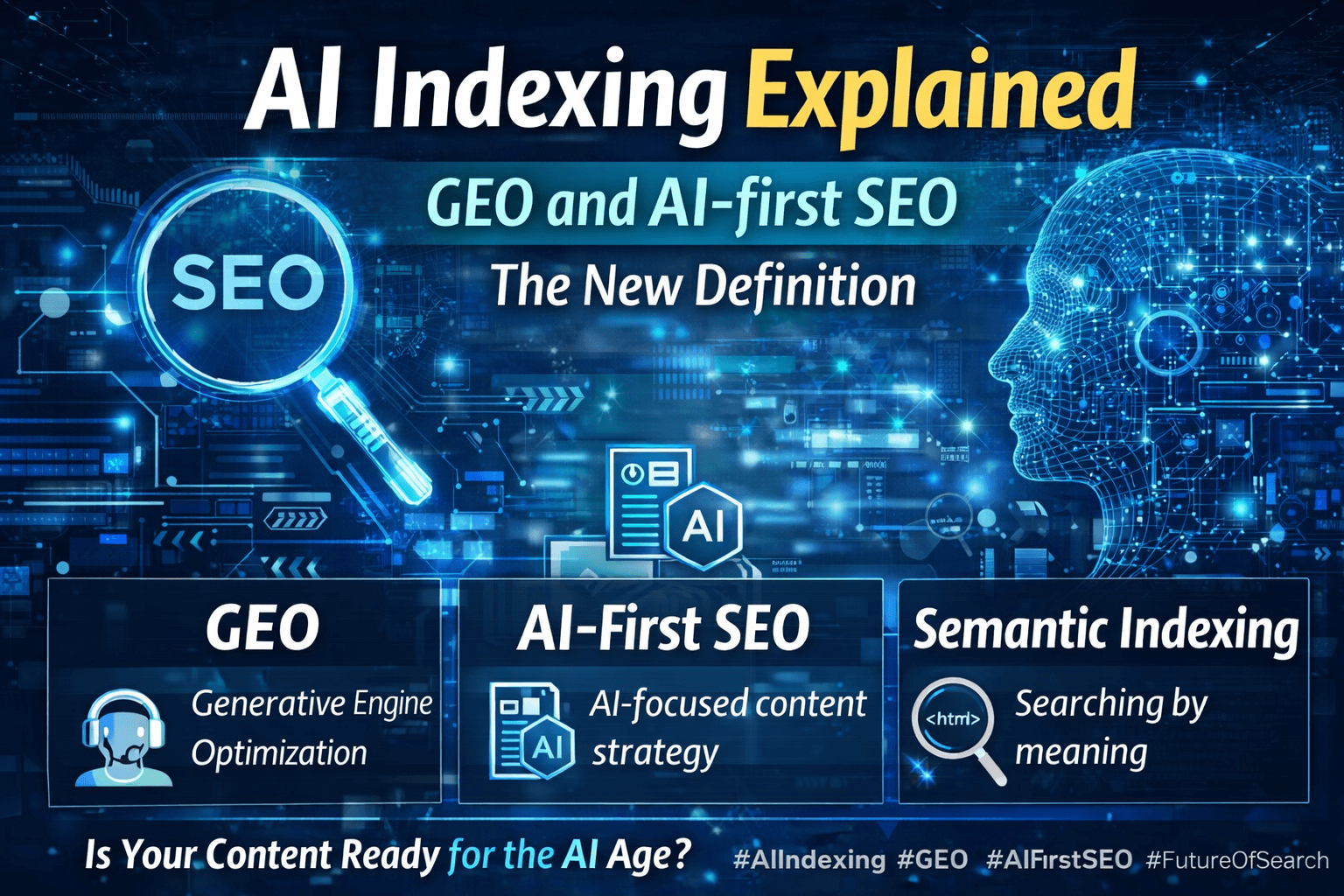

Key Concepts and Definitions

AI Crawler: A specialized bot that systematically scans websites to collect data for training large language models or powering real-time AI search results. Unlike traditional search engine crawlers that index content to drive traffic to source websites, AI crawlers often extract information to generate direct answers within the AI platform itself. Major AI crawlers include GPTBot (OpenAI), ClaudeBot (Anthropic), PerplexityBot, OAI-SearchBot, and Meta-ExternalAgent. These crawlers identify themselves through user-agent strings in HTTP requests, allowing site owners to recognize and control their access through robots.txt configurations.

Generative Engine Optimization (GEO): The practice of optimizing digital content and online presence to increase visibility within AI-generated search results and answers. Introduced formally in a November 2023 Princeton University research paper, GEO represents a fundamental shift from optimizing for search engine results pages to optimizing for citation within generative responses. GEO encompasses content structure, semantic clarity, entity definition, and authority signals that influence how AI models select, interpret, and present information. Unlike traditional SEO, which measures success through rankings and click-through rates, GEO prioritizes reference rates and generative appearance scores.

User-Agent String: The technical identifier that web crawlers use to announce their identity when requesting web pages. Each AI crawler uses a distinct user-agent string such as “GPTBot/1.0” or “ClaudeBot/1.0”, allowing server administrators to recognize, log, and control access through robots.txt rules. User-agent strings are critical for website owners who need to differentiate between beneficial crawlers that enhance visibility and extractive crawlers that may compete with their traffic models. Some newer AI crawlers, particularly agentic browsers like ChatGPT’s Atlas, deliberately avoid distinct user-agent strings, making them indistinguishable from human visitors.

Training Crawlers vs. Search Crawlers: AI crawlers serve two primary functions with different implications for website owners. Training crawlers systematically collect web content to build and improve large language models’ knowledge bases, accounting for approximately 80% of AI crawling activity as of 2025. Search crawlers index content specifically to power real-time search features in AI assistants and account for most of the remaining 18-20%, with the rest coming from user-action crawling. The distinction matters because training crawlers influence the fundamental knowledge encoded in models while search crawlers determine real-time citation behavior. OpenAI deploys both GPTBot (training) and OAI-SearchBot (search), while Anthropic uses ClaudeBot for training and Claude-SearchBot for real-time retrieval.
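To make the training/search split concrete, here is a minimal Python sketch that maps a user-agent string to the purpose described above. The mapping covers only the four crawlers named in this definition, the function name `classify_crawler` is hypothetical, and matching on a substring of the user-agent is an assumption about how the tokens appear in real requests, not a verified identification method.

```python
from typing import Optional, Tuple

# Crawler tokens and purposes as described in this article. This is an
# illustrative mapping, not an official or exhaustive registry.
CRAWLER_PURPOSE = {
    "GPTBot": "training",
    "ClaudeBot": "training",
    "OAI-SearchBot": "search",
    "Claude-SearchBot": "search",
}


def classify_crawler(user_agent: str) -> Optional[Tuple[str, str]]:
    """Return (token, purpose) for a known AI crawler, or None otherwise."""
    lowered = user_agent.lower()
    for token, purpose in CRAWLER_PURPOSE.items():
        # Case-insensitive substring match on the crawler token.
        if token.lower() in lowered:
            return token, purpose
    return None


print(classify_crawler("Mozilla/5.0 (compatible; GPTBot/1.0)"))
```

Because user-agent strings can be spoofed, a match here only says what a request claims to be.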

Semantic Indexing: The process by which AI crawlers analyze and understand the meaning, context, and relationships within content rather than simply matching keywords. Semantic indexing enables AI models to comprehend entities, extract definitions, identify relationships between concepts, and determine source authority based on content structure and clarity. This differs fundamentally from traditional keyword-based indexing because it prioritizes contextual understanding over term frequency. Content optimized for semantic indexing uses explicit concept definitions, clear entity references, structured hierarchies, and logical relationship descriptions.

Zero-Click Search: User behavior where information needs are satisfied directly through AI-generated responses without clicking through to source websites. A 2024 industry analysis found that 70% of users prefer AI-generated summaries over traditional search results for quick answers, fundamentally disrupting traffic-dependent business models. Zero-click searches create a strategic tension: brands must be present in AI training data to maintain relevance, yet that very presence may eliminate the need for users to visit their websites. This tension defines the central challenge of GEO strategy.

Reference Rate: The new metric measuring how frequently a brand or content source is cited, mentioned, or referenced in AI-generated answers. Reference rate replaces traditional click-through rate as the primary success indicator in generative search environments. A high reference rate indicates that AI models consider a source authoritative and relevant for specific query types. Tools like Ahrefs’ Brand Radar and Semrush’s AI Visibility Toolkit now track reference rates across major AI platforms, enabling brands to monitor their presence in generative responses the way they once tracked SERP rankings.
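The article does not give a formula for reference rate, so the following Python sketch assumes one simple reading: the share of sampled AI answers that mention a brand at least once. The function name, the sample answers, and the brand "ExampleHost" are all hypothetical, and commercial tools may compute the metric differently.

```python
from typing import List


def reference_rate(answers: List[str], brand: str) -> float:
    """Fraction of answers that mention the brand at least once.

    Assumed definition: mentions / total answers, case-insensitive.
    """
    if not answers:
        return 0.0
    needle = brand.lower()
    cited = sum(1 for answer in answers if needle in answer.lower())
    return cited / len(answers)


# Hypothetical answers collected for one query across several AI platforms.
sample_answers = [
    "ExampleHost is a solid pick for large sites.",
    "Consider OtherCo for budget hosting.",
    "Both examplehost and OtherCo handle high traffic.",
    "There is no clear winner.",
]
print(reference_rate(sample_answers, "ExampleHost"))
```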

Entity Recognition and Attribution: AI’s capability to identify specific entities (people, organizations, products, concepts) within web content and correctly attribute information to those entities. Accurate entity recognition depends on clear entity definitions, consistent naming conventions, and explicit relationship statements. Schema.org markup, particularly Organization and Person types, significantly improves entity recognition accuracy. Misattribution occurs when content lacks clear entity signals, causing AI models to confuse similar entities or assign information to incorrect sources.

Generative Appearance Score: A metric that measures the frequency and prominence of a source within AI-generated responses across multiple queries and platforms. This score functions as the GEO equivalent of traditional search rankings, quantifying how often and how prominently a brand appears in AI answers. High generative appearance scores result from strong domain authority, clear expertise signals, comprehensive content coverage, consistent entity definitions, and high-quality structured data. Platforms like Profound and Daydream help brands track and optimize their generative appearance scores through synthetic query analysis.

Source Stability: An AI model’s assessment of whether a source provides consistent, reliable information over time. Sources with high stability scores receive preferential treatment in citation decisions because models can confidently reference them without risking contradictory information. Source stability increases through regular content updates, consistent messaging across platforms, clear versioning of time-sensitive information, and explicit correction of outdated content. Brands that frequently change positioning, restructure websites without redirects, or provide conflicting information across channels damage their source stability scores.

Conceptual Map

Think of the AI crawler ecosystem as a multi-layered knowledge acquisition network operating simultaneously at global scale. The foundational layer consists of training crawlers that function like massive libraries systematically cataloging human knowledge—GPTBot, ClaudeBot, and Meta-ExternalAgent continuously traverse the web, building comprehensive knowledge bases that form the bedrock of what these models “know.” Above this sits the real-time search layer, where specialized crawlers like OAI-SearchBot and PerplexityBot act more like research assistants, retrieving specific, current information in response to user queries.

These two layers interact through a feedback mechanism: training data establishes baseline authority and entity definitions, while real-time search validates and updates that knowledge with current information. When a user asks “who are the best WordPress hosting providers?”, the AI first draws from its training knowledge to understand WordPress, hosting, and quality indicators. Then search crawlers fetch recent reviews, performance benchmarks, and current offerings to validate and update recommendations. The model synthesizes both layers, producing an answer that reflects both learned authority patterns and real-time verification.

The critical insight is that these layers don’t operate independently—they reinforce each other. Websites that systematically optimized for training crawlers in 2023-2024 now find themselves preferentially cited by search crawlers in 2025 because the training layer established them as authoritative sources. This creates a compounding advantage: early presence in training data leads to higher citation rates in real-time search, which in turn reinforces authority signals that influence future training cycles. The window for establishing this foundational authority is narrowing as models mature and training frequency decreases.

The Mechanics of Next-Generation AI Crawling

AI crawlers operate through fundamentally different mechanisms than traditional search bots, processing content through interpretation layers that extract meaning rather than simply indexing keywords. When GPTBot or ClaudeBot encounters a webpage, it doesn’t just catalog terms and links—it performs semantic analysis to understand entities, extract relationships, identify expertise signals, and evaluate source credibility. This interpretive approach means that content structure, clarity, and explicit definition become more important than keyword density or exact-match phrases.

The crawling process begins with discovery, typically through existing search engine indexes, sitemap files, or link graphs. However, AI crawlers prioritize differently than traditional bots. Rather than following every link indiscriminately, they perform real-time relevance assessments to determine whether a page merits detailed analysis. Pages with clear semantic structure, explicit entity definitions, and strong authority signals receive deeper analysis and longer crawl budgets. Pages with thin content, unclear structure, or weak expertise markers get superficial treatment or complete exclusion from training datasets.

According to Search Engine Journal’s December 2025 analysis of verified crawler logs, major AI crawlers demonstrate distinct behavioral patterns. GPTBot shows the highest crawl frequency for pages with original research, quantitative data, and clear methodology sections. ClaudeBot prioritizes long-form explanatory content with explicit concept definitions and relationship descriptions. PerplexityBot focuses on pages that provide direct answers in opening paragraphs and include comprehensive FAQ sections. These preferences reflect each model’s training objectives and the types of information architecture they’re optimized to process.

The extraction phase is where AI crawlers diverge most dramatically from traditional indexing. Rather than storing page content verbatim, AI crawlers perform on-the-fly analysis to extract semantic meaning, identify key entities, parse relationship statements, and evaluate content quality signals. This extraction process prioritizes content that uses semantic HTML tags (article, section, aside, header), includes Schema.org structured data, provides explicit definitions for key concepts, uses clear hierarchical organization, and demonstrates expertise through author credentials, citation of authoritative sources, and detailed technical accuracy.

Cloudflare’s August 2025 crawler analysis revealed significant variations in how different AI crawlers allocate their crawl budget. GPTBot dedicates approximately 65% of its requests to pages classified as “high semantic density”—content with explicit entity definitions, clear concept explanations, and structured data markup. The remaining 35% goes to breadth coverage of less-structured content. ClaudeBot shows an even stronger preference, allocating 78% of crawl budget to semantically rich pages. This means websites that invest in semantic optimization receive disproportionate attention from training crawlers, directly influencing their representation in model knowledge bases.

Platform-Specific Crawler Characteristics

OpenAI operates the most extensive crawler infrastructure with distinct bots for different purposes. GPTBot handles broad training data collection, indexing content that will form the foundation of GPT model knowledge. OAI-SearchBot powers ChatGPT’s search feature, providing real-time web information for current queries. ChatGPT-User represents the most interesting evolution: it is the user-agent for requests triggered by user actions in ChatGPT and Custom GPTs, allowing the model to actively fetch pages during conversations. Crucially, OpenAI’s newer agentic systems like Operator use undifferentiated user-agent strings, making them indistinguishable from human browsers and impossible to control through traditional robots.txt rules.

The practical implication is significant: while website owners can control GPTBot and OAI-SearchBot access, they have no technical method to block ChatGPT’s agentic browsing or Operator’s web interactions. This represents a fundamental shift in the crawler control paradigm. According to Akamai’s November 2025 AI Pulse report, OAI-SearchBot drove the majority of AI search crawler activity from June through November 2025, with traffic patterns showing clear correlation to user engagement—when ChatGPT usage spikes, OAI-SearchBot activity increases proportionally. This organic, user-driven growth pattern differs dramatically from the scheduled, methodical crawling that training bots follow.

Anthropic’s crawler strategy emphasizes quality over breadth. ClaudeBot, their primary training crawler, operates with lower request volume but longer page analysis time compared to GPTBot. Server log analysis shows ClaudeBot spending an average of 3.2 seconds processing each page compared to GPTBot’s 1.8 seconds, suggesting more intensive semantic analysis. Claude-SearchBot, introduced for Claude’s search capabilities, demonstrates extremely targeted behavior: it concentrates on specific industries and domains rather than broad web coverage. Akamai data shows Claude-SearchBot activity spiking sharply on November 4, 2025. The spike was heaviest in specific industries but visible across dozens of them; the cause remains unclear, and traffic patterns have since stabilized.

Perplexity’s PerplexityBot functions as a hybrid between training and search crawlers. It maintains a broad index similar to training crawlers but updates content more frequently to support real-time search. PerplexityBot recorded peak activity of 30 million triggers in a single day during Q3 2025, though this represents significantly less concentrated traffic than OAI-SearchBot’s 250 million daily peak. The distribution pattern reveals Perplexity’s strategy: rather than focusing intensively on select domains, PerplexityBot spreads activity broadly across the web, indexing diverse sources to support its citation-focused answer format.

Meta’s crawler infrastructure has evolved rapidly throughout 2025. Meta-ExternalAgent emerged from near-zero market share to capture 19% of AI crawler activity by July 2025, representing one of the year’s most dramatic shifts. This crawler serves Meta’s AI assistants across Facebook, Instagram, and WhatsApp, making it critical for brands seeking visibility in social platform AI features. Unlike crawlers focused on web search, Meta-ExternalAgent prioritizes content types that align with social media contexts: how-to guides, product recommendations, local business information, and trending topic explainers. The crawler demonstrates strong preference for video transcripts, image alt text, and structured data about physical locations.

The Training vs. Search Dichotomy

Understanding the distinction between training crawlers and search crawlers is essential for strategic optimization. Training crawlers build the foundational knowledge base that determines what AI models “know” at a fundamental level. Content indexed by training crawlers in 2024-2025 shapes model behavior for potentially years, as full retraining cycles are expensive and infrequent. Search crawlers, by contrast, provide real-time updates and current information that modifies but doesn’t fundamentally alter the model’s base knowledge.

This creates a strategic priority: being present in training data establishes baseline authority and entity recognition that search crawlers then reference when retrieving real-time information. A website that was comprehensively indexed by GPTBot during training becomes a preferential source for OAI-SearchBot during real-time queries, because the model already recognizes that source as authoritative for specific topics. This compounding effect explains why early optimization for AI crawlers creates lasting advantages that become increasingly difficult for competitors to overcome.

Cloudflare’s data demonstrates the scale of this training focus: from January to July 2025, training-related crawling represented 80% of total AI crawler activity, with that proportion increasing to 82% in the most recent analysis period. The absolute volume of training crawling grew steadily throughout the year, while search crawling remained relatively flat. This suggests we’re still in a knowledge acquisition phase where models prioritize building comprehensive training datasets. However, as models mature and training datasets reach saturation, this balance will likely shift toward search crawling—making current presence in training data even more valuable as future training opportunities decrease.

The practical implication is clear: website owners should prioritize being indexed by training crawlers (GPTBot, ClaudeBot, and Common Crawl’s CCBot) over search crawlers when making strategic decisions about crawler access. Blocking training crawlers to preserve traffic while allowing search crawlers creates a paradox: the site maintains short-term traffic but loses the foundational authority that would make search crawler citations more frequent and prominent. The optimal strategy for most sites is allowing all major AI crawlers while focusing content optimization on characteristics that training crawlers prioritize: semantic clarity, entity definition, expertise signals, and structured data.

Advanced Framework: The GEO Optimization Matrix

Effective optimization for AI crawlers requires a systematic framework that addresses the four primary dimensions these systems evaluate: semantic clarity, entity definition, authority signals, and technical accessibility. Think of this as a matrix where each dimension reinforces the others—improvements in semantic clarity enhance entity recognition, which strengthens authority signals, which increases crawl priority. This interconnected approach explains why piecemeal optimization yields limited results while comprehensive implementation creates compounding benefits.

Semantic Clarity measures how explicitly and unambiguously content communicates meaning. AI crawlers excel at understanding clear, direct statements but struggle with implied meaning, cultural references, or context-dependent information. High semantic clarity requires defining key concepts explicitly, using precise terminology consistently, structuring information hierarchically, providing clear relationship statements between entities, and avoiding ambiguous pronouns or vague references. Content with high semantic clarity reads almost textbook-like in its precision—every concept is introduced before use, relationships are stated explicitly, and terminology remains consistent throughout.

The technical implementation of semantic clarity involves HTML5 semantic tags that provide structural meaning. The article tag wraps main content, section tags separate distinct topics, header and footer tags provide document structure, aside tags contain supplementary information, and nav tags identify navigation elements. Each heading level (h1 through h6) should follow logical hierarchy, with the h1 representing the primary topic and subsequent headings indicating subordinate concepts. AI crawlers use this semantic HTML to understand content structure and identify which sections contain definitional information versus supporting details.
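The heading rules above (one h1, no skipped levels) are easy to check mechanically. The following Python sketch uses only the standard library's `html.parser`; the class and function names are hypothetical, and the "no skipped level" rule is one reasonable reading of "logical hierarchy", not a check any crawler is known to perform.

```python
from html.parser import HTMLParser
from typing import List


class HeadingAudit(HTMLParser):
    """Collect heading levels (1-6) in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.levels: List[int] = []

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag names; match h1..h6 only (not hr, html).
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def heading_problems(html: str) -> List[str]:
    """Report a missing or duplicate h1 and any skipped heading levels."""
    parser = HeadingAudit()
    parser.feed(html)
    problems = []
    h1_count = parser.levels.count(1)
    if h1_count != 1:
        problems.append(f"expected exactly one h1, found {h1_count}")
    for previous, current in zip(parser.levels, parser.levels[1:]):
        if current > previous + 1:
            problems.append(f"h{previous} is followed by h{current} (skipped level)")
    return problems


page = "<article><h1>Guide</h1><section><h2>Setup</h2><h3>Install</h3></section></article>"
print(heading_problems(page))
```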

Entity Definition establishes clear, unambiguous identities for people, organizations, products, concepts, and locations mentioned in content. Poor entity definition causes attribution errors where AI models confuse similar entities or assign information to incorrect sources. Strong entity definition requires consistent naming conventions, explicit type identification, relationship declarations to known entities, and structured data markup. When mentioning a person for the first time, include their full name, professional credentials, and organizational affiliation. When referencing an organization, provide the complete legal name, industry classification, and primary business activities.

Schema.org markup serves as the primary mechanism for technical entity definition. The Organization type identifies companies with properties for name, url, logo, sameAs (social profiles), and foundingDate. The Person type identifies individuals with properties for name, jobTitle, worksFor, and sameAs. The Product type defines offerings with name, description, brand, and offers properties. These structured data elements help AI crawlers correctly identify and categorize entities, reducing attribution errors and improving citation accuracy. Implementing comprehensive Schema.org markup across all entity types is among the highest-value technical optimizations for AI crawler indexing.
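As an illustration of the Organization properties listed above, this Python sketch builds a JSON-LD object and wraps it in the script tag commonly used to embed structured data. The helper name `organization_jsonld` and all values (company name, URLs, founding date) are placeholders, not data from the article.

```python
import json
from typing import Iterable


def organization_jsonld(name: str, url: str, logo: str,
                        same_as: Iterable[str], founding_date: str) -> str:
    """Serialize a Schema.org Organization as a JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "logo": logo,
        "sameAs": list(same_as),  # links to the organization's other profiles
        "foundingDate": founding_date,
    }
    return json.dumps(data, indent=2)


# Placeholder values for illustration only.
markup = organization_jsonld(
    name="Example Co",
    url="https://example.com",
    logo="https://example.com/logo.png",
    same_as=["https://www.linkedin.com/company/example-co"],
    founding_date="2001-05-01",
)
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```

Generating the markup from a single source of record, rather than hand-editing it per page, also helps keep entity details consistent across templates.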

Authority Signals communicate expertise, trustworthiness, and reliability to AI crawlers evaluating source quality. Authority manifests through multiple channels: author credentials and expertise demonstrations, citation of credible external sources, original research and data presentation, consistency of information across platforms, and longevity of domain presence. AI models increasingly appear to evaluate content through an E-E-A-T framework (Experience, Expertise, Authoritativeness, Trustworthiness), consistent with what is understood about how AI search engines evaluate content. Author bylines should include full credentials, relevant experience, and expertise markers. Content should cite authoritative sources through proper attribution and links. Original research, surveys, or data analysis dramatically increases authority perception.

The social proof dimension of authority involves maintaining consistent information across all digital properties. When AI crawlers encounter conflicting information about an entity across different sources, they reduce confidence in all sources. This means company information on your website, LinkedIn company page, Crunchbase profile, and Wikipedia entry should align precisely. Product specifications, founding dates, leadership teams, and business descriptions should match character-for-character across platforms. The operational burden is significant but the authority benefit is substantial—AI models preferentially cite sources that demonstrate information consistency across the broader web.
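The cross-platform consistency check described above can be approximated with a small comparison script. This Python sketch assumes you have already collected each platform's entity details into plain dictionaries by hand or by export; the function name, field names, and sample records are hypothetical.

```python
from typing import Dict


def find_inconsistencies(profiles: Dict[str, Dict[str, str]]) -> Dict[str, Dict[str, str]]:
    """Return each field whose value differs between platforms.

    `profiles` maps a platform name to that platform's field/value record.
    Comparison is exact, matching the character-for-character goal above.
    """
    fields = set()
    for record in profiles.values():
        fields.update(record)
    conflicts = {}
    for field in sorted(fields):
        values = {
            platform: record[field]
            for platform, record in profiles.items()
            if field in record
        }
        if len(set(values.values())) > 1:
            conflicts[field] = values
    return conflicts


# Hypothetical records for illustration.
profiles = {
    "website": {"name": "Example Co", "foundingDate": "2001"},
    "linkedin": {"name": "Example Co", "foundingDate": "2002"},
}
print(find_inconsistencies(profiles))
```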

Technical Accessibility ensures AI crawlers can efficiently access, parse, and extract information from web pages. This dimension includes server response time, robots.txt configuration, XML sitemap availability, mobile responsiveness, and absence of crawler-hostile patterns. AI crawlers operate under crawl budget constraints—the number of pages they’ll request from a given site within a time period. Sites that respond slowly, produce server errors, or require complex JavaScript execution receive reduced crawl priority and may be excluded from training datasets entirely.

The robots.txt file serves as the primary access control mechanism for crawlers. Website owners can allow or block specific crawlers by user-agent string. A typical AI-friendly robots.txt might include:

    User-agent: GPTBot
    Allow: /

    User-agent: ClaudeBot
    Allow: /

    User-agent: PerplexityBot
    Allow: /

    User-agent: CCBot
    Allow: /

This configuration allows major AI training and search crawlers unrestricted access. Alternatively, sites prioritizing traffic over AI visibility might block training crawlers while allowing search crawlers. However, the strategic implications covered in earlier sections suggest this approach sacrifices long-term authority for short-term traffic protection. The most effective strategy for most sites is allowing all major crawlers while focusing optimization on content quality and structure.
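The two strategies just described (allow everything, or block training crawlers while allowing search crawlers) differ only in which groups get `Disallow: /`. The Python sketch below generates both variants. The function name is hypothetical, and the grouping of tokens into training and search lists follows this article's descriptions; treating PerplexityBot as a search crawler is an assumption, since the article calls it a hybrid.

```python
# Token groupings follow this article; they are assumptions, not an
# authoritative classification.
TRAINING_CRAWLERS = ["GPTBot", "ClaudeBot", "CCBot"]
SEARCH_CRAWLERS = ["OAI-SearchBot", "Claude-SearchBot", "PerplexityBot"]


def build_robots_txt(allow_training: bool, allow_search: bool = True) -> str:
    """Render one robots.txt group per crawler token."""
    groups = []
    for tokens, allowed in (
        (TRAINING_CRAWLERS, allow_training),
        (SEARCH_CRAWLERS, allow_search),
    ):
        rule = "Allow: /" if allowed else "Disallow: /"
        for token in tokens:
            groups.append(f"User-agent: {token}\n{rule}")
    # Blank lines separate groups; end the file with a newline.
    return "\n\n".join(groups) + "\n"


# Variant for a site that blocks training crawlers but allows search crawlers.
print(build_robots_txt(allow_training=False))
```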

How to Apply This (Step-by-Step)

Implementing effective AI crawler optimization requires methodical execution across technical, content, and strategic dimensions. The following operational sequence moves from foundational technical setup through advanced content optimization, providing actionable steps that compound as they progress.

Step 1: Audit Current AI Crawler Access and Activity

Begin by understanding which AI crawlers currently access your site and how they behave. Install server log analysis tools or use services like Knowatoa’s AI Search Console to identify crawler user-agent strings in your traffic. Look for GPTBot, ClaudeBot, OAI-SearchBot, PerplexityBot, Meta-ExternalAgent, and Bytespider patterns in your logs. Quantify the request volume, identify which pages receive crawler attention, and note any access patterns or restrictions. Many site owners discover they’ve been unknowingly blocking valuable AI crawlers through overly aggressive bot blocking plugins or restrictive robots.txt rules configured for outdated SEO practices.

Review your current robots.txt file to identify whether you’re explicitly allowing or blocking AI crawlers. Many WordPress security plugins default to blocking all non-traditional bots, which often includes AI crawlers. Check firewall configurations, CDN settings, and security plugins that might block crawler IP ranges. Use Merkle’s robots.txt tester to verify individual crawler access for specific pages. Document your findings in a spreadsheet: crawler name, current access status, request volume over the past 30 days, and pages most frequently accessed.
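If a dedicated log analysis tool is not available, a short script can produce the request counts and most-accessed pages for the audit spreadsheet. This Python sketch assumes access logs in the common "combined" format used by Apache and Nginx; the regular expression, the function name `audit_log`, and the sample log lines are illustrative and may need adjusting for your server's format.

```python
import re
from collections import Counter

# Crawler tokens named in this step.
AI_CRAWLER_TOKENS = [
    "GPTBot", "ClaudeBot", "OAI-SearchBot",
    "PerplexityBot", "Meta-ExternalAgent", "Bytespider",
]

# Combined format: ip ident user [time] "METHOD path proto" status size "referer" "agent"
LOG_LINE = re.compile(
    r'^\S+ \S+ \S+ \[[^\]]+\] "\S+ (?P<path>\S+) [^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def audit_log(lines):
    """Count requests per AI crawler and per (crawler, path) pair."""
    requests = Counter()
    pages = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if not match:
            continue  # skip lines in an unexpected format
        agent = match.group("agent").lower()
        for token in AI_CRAWLER_TOKENS:
            if token.lower() in agent:
                requests[token] += 1
                pages[(token, match.group("path"))] += 1
                break
    return requests, pages


# Made-up log lines for illustration.
sample_lines = [
    '203.0.113.5 - - [10/Dec/2025:10:00:00 +0000] "GET /docs/scaling HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '203.0.113.9 - - [10/Dec/2025:10:00:02 +0000] "GET /pricing HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (compatible; ClaudeBot/1.0)"',
    '198.51.100.7 - - [10/Dec/2025:10:00:03 +0000] "GET / HTTP/1.1" 200 1024 "-" "Mozilla/5.0 (Windows NT 10.0) Chrome/120.0"',
]
requests, pages = audit_log(sample_lines)
print(requests.most_common())
```

In practice you would pass an open file object instead of `sample_lines`, since the function only iterates over lines.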

Practical change: A SaaS company discovered their Wordfence security plugin was blocking GPTBot and ClaudeBot entirely despite having an allow-all robots.txt configuration. After allowlisting verified IP ranges for major AI crawlers in Wordfence settings, they observed a 420% increase in AI crawler requests within 14 days, with training crawlers immediately beginning comprehensive site indexing that had been blocked for months.
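Allowlisting by IP range, as in the example above, comes down to testing whether a request's source address falls inside a provider's published CIDR blocks. The Python sketch below shows that check with the standard `ipaddress` module. The ranges are reserved documentation blocks used as placeholders, not real crawler ranges; substitute the lists each AI provider publishes.

```python
import ipaddress
from typing import List

# Placeholder CIDR blocks (reserved for documentation), NOT real crawler ranges.
EXAMPLE_CRAWLER_RANGES = ["192.0.2.0/24", "2001:db8::/32"]


def ip_in_ranges(ip: str, cidr_ranges: List[str]) -> bool:
    """Return True if the address falls inside any of the CIDR ranges."""
    address = ipaddress.ip_address(ip)
    # Membership is False when the address and network IP versions differ.
    return any(address in ipaddress.ip_network(cidr) for cidr in cidr_ranges)


print(ip_in_ranges("192.0.2.44", EXAMPLE_CRAWLER_RANGES))
print(ip_in_ranges("198.51.100.7", EXAMPLE_CRAWLER_RANGES))
```

Combining this check with the user-agent match guards against requests that merely claim to be a known crawler.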

Step 2: Configure Strategic Robots.txt Rules

Based on your business model and strategic objectives, configure robots.txt to allow or block specific AI crawlers. For product/service businesses seeking brand visibility in AI recommendations, allow all major training and search crawlers. For advertising-dependent content sites concerned about zero-click traffic loss, consider blocking training crawlers while allowing search crawlers to maintain real-time visibility. For most businesses, the optimal strategy is allowing all major AI crawlers while focusing on optimizing what they index.

Create or modify your robots.txt file with explicit crawler rules. Place the file in your site’s root directory (example.com/robots.txt). Use specific user-agent declarations followed by Allow or Disallow directives:

User-agent: GPTBot
Allow: /

User-agent: OAI-SearchBot
Allow: /

User-agent: ClaudeBot
Allow: /

User-agent: PerplexityBot
Allow: /

Test your configuration using robots.txt testing tools and monitor server logs to verify crawlers respect your rules. Note that robots.txt compliance is voluntary, and some crawlers ignore these rules entirely. For absolute blocking, use firewall rules based on verified crawler IP ranges published by each AI provider. Document your robots.txt strategy in internal documentation with clear rationale for future team members who might question allowing AI crawler access.
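One way to test a configuration before deploying it is Python's built-in urllib.robotparser, which evaluates rules the way a compliant crawler would. The sketch below parses the rules from this step and reports what each user agent may fetch. The final "User-agent: *" group and the /private/ path are assumptions added purely to show a contrasting result; they are not part of the recommended file.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: GPTBot
Allow: /

User-agent: OAI-SearchBot
Allow: /

User-agent: ClaudeBot
Allow: /

User-agent: PerplexityBot
Allow: /

# Assumption for illustration: everyone else is kept out of /private/.
User-agent: *
Disallow: /private/
"""

def check_access(robots_txt, bots, url):
    """Return {bot: True/False} for whether each bot may fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {bot: parser.can_fetch(bot, url) for bot in bots}

bots = ["GPTBot", "OAI-SearchBot", "ClaudeBot", "PerplexityBot", "SomeOtherBot"]
for bot, allowed in check_access(ROBOTS_TXT, bots, "https://example.com/private/report.html").items():
    print(bot, "allowed" if allowed else "blocked")
```

This only tells you what the file says, not whether a given crawler obeys it, so the server log check remains the real confirmation.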

Practical change: An e-commerce retailer initially blocked all AI crawlers out of traffic protection concerns. After analyzing competitor presence in ChatGPT and Perplexity recommendations, they reversed course and allowed all major crawlers. Within 90 days, their products began appearing in AI-generated shopping recommendations, driving a new traffic source that more than offset the zero-click losses they had feared. The net effect was an 18% traffic increase, with significantly higher-intent visitors arriving from AI referrals.

Step 3: Implement Comprehensive Semantic HTML Structure

Restructure page templates to use HTML5 semantic elements that communicate document structure and content meaning to AI crawlers. Replace generic div tags with semantic equivalents that indicate purpose: article for main content, section for distinct content segments, header for introductory content, footer for supplementary information, aside for related but non-essential content, and nav for navigation elements. Ensure heading tags follow logical hierarchy with single h1 tags for page titles and nested h2-h6 tags for section organization.

Review your site’s most important pages—homepage, key product/service pages, author bios, about page, and high-value content articles. Audit the HTML source to identify where generic div tags could be replaced with semantic alternatives. Many content management systems allow template customization without coding through theme editors. For WordPress sites, themes like GeneratePress or Kadence offer semantic HTML options in their customization panels. For custom-built sites, work with developers to implement semantic HTML across templates.

Establish heading hierarchy standards for all content creation. Every page should have exactly one h1 tag containing the primary page topic. Major sections should use h2 tags, subsections within those should use h3 tags, and so on. Avoid skipping heading levels (jumping from h2 to h4) as this breaks logical structure that AI crawlers use to understand content organization. Train content creators to use native heading styles rather than manually styling text to appear as headings, as semantic tags communicate structure while styled text does not.
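The two heading rules above (exactly one h1, no skipped levels) are easy to check automatically. The following is a minimal sketch using Python's standard html.parser; the function name audit_headings is our own, and a production audit would more likely rely on a crawler such as Screaming Frog.

```python
from html.parser import HTMLParser

class HeadingCollector(HTMLParser):
    """Collect heading levels (1-6) in document order."""
    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        # Tags arrive lowercased; match h1..h6 but not e.g. <hr>.
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))

def audit_headings(html):
    """Return a list of problems; an empty list means the outline is clean."""
    collector = HeadingCollector()
    collector.feed(html)
    problems = []
    h1_count = collector.levels.count(1)
    if h1_count != 1:
        problems.append(f"expected exactly one h1, found {h1_count}")
    # A heading may go at most one level deeper than the heading before it.
    for prev, cur in zip(collector.levels, collector.levels[1:]):
        if cur > prev + 1:
            problems.append(f"h{prev} followed by h{cur} skips a level")
    return problems

print(audit_headings("<h1>Guide</h1><h2>Setup</h2><h4>Details</h4>"))
```

Moving back up the hierarchy (an h3 followed by an h2) is not flagged, since closing a subsection and opening a new section is normal structure.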

Practical change: A B2B software company restructured their documentation pages from generic div-based layouts to semantic HTML with proper article, section, and aside tags. They implemented strict heading hierarchy across 200+ documentation pages. AI crawler analysis revealed ClaudeBot increased its crawl depth on these pages by 340%, spending significantly longer analyzing content structure. Within 60 days, Claude began citing their documentation as authoritative sources for technical queries, directly attributing specific sections through precise internal anchors that the semantic structure enabled.

Step 4: Deploy Comprehensive Structured Data Markup

Implement Schema.org JSON-LD structured data across all major page types to provide explicit entity definitions and relationship declarations that AI crawlers prioritize. Start with fundamental types: Organization markup on every page, Article markup on content pages, Person markup for author bios, Product markup for product pages, FAQPage markup where applicable, and HowTo markup for procedural content. Place JSON-LD scripts in the document head section for optimal crawler access.

For Organization markup, include all available properties: name, url, logo, description, sameAs links to social profiles, address for physical locations, contactPoint for customer service, and foundingDate for historical context. For Article markup, include headline, author reference, datePublished, dateModified, publisher reference, articleBody, and keywords. For Person markup, include name, jobTitle, worksFor reference, description of expertise, image, and sameAs links to professional profiles. The more properties you populate, the more explicit entity definition you provide to AI crawlers.
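To keep markup consistent across templates, it can help to generate JSON-LD from one set of functions rather than hand-editing it per page. The sketch below builds Organization and Article objects with a subset of the Schema.org properties listed above and wraps them in a script tag. The helper names and all example values (Example Co, Jane Doe, the URLs) are hypothetical.

```python
import json

def organization(name, url, logo, same_as):
    """Build a Schema.org Organization object with a few core properties."""
    return {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "logo": logo,
        "sameAs": same_as,
    }

def article(headline, author_name, publisher, date_published, date_modified):
    """Build a Schema.org Article that references its author and publisher."""
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "publisher": {
            "@type": "Organization",
            "name": publisher["name"],
            "url": publisher["url"],
        },
        "datePublished": date_published,
        "dateModified": date_modified,
    }

def script_tag(data):
    """Serialize a JSON-LD object into a script element for the page head."""
    return ('<script type="application/ld+json">\n'
            + json.dumps(data, indent=2)
            + "\n</script>")

# Hypothetical example values.
org = organization("Example Co", "https://example.com",
                   "https://example.com/logo.png",
                   ["https://www.linkedin.com/company/example-co"])
print(script_tag(article("What Is GEO?", "Jane Doe", org,
                         "2025-12-01", "2025-12-10")))
```

Further properties (address, contactPoint, articleBody, and so on) slot into the same dictionaries; the output should still be run through a validator before it ships.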

Use Google’s Rich Results Test or the Schema Markup Validator at validator.schema.org to verify markup implementation (Google retired its older Structured Data Testing Tool in favor of these two). Fix any errors or warnings, as invalid structured data can be worse than no structured data: it suggests technical incompetence to AI systems evaluating source quality. Implement structured data consistently across all similar page types rather than selectively on high-value pages, as consistency signals systematic quality rather than opportunistic optimization. Monitor Google Search Console’s structured data reports to identify implementation issues and track rich result eligibility.

Practical change: A professional services firm added comprehensive Organization and Person structured data to their website, including detailed jobTitle, expertise descriptions, and sameAs links for all 15 partners. They implemented Article structured data on 300+ thought leadership pieces with explicit author attribution. ChatGPT began specifically naming individual partners when asked about experts in their practice areas, pulling expertise descriptions directly from their Person schema. The firm observed a 290% increase in individual partner visibility in AI-generated expert lists, demonstrating how structured data enables granular entity recognition rather than just company-level attribution.

Step 5: Create AI-Optimized Content Architecture

Restructure content to match patterns that AI crawlers prioritize for training data extraction and real-time citation. This means providing direct answers in opening paragraphs, defining key concepts explicitly before using them, using clear section headers that describe content, incorporating bulleted lists for scannable information, and adding FAQ sections that mirror natural language queries. AI models show strong preference for content that can be easily extracted and repurposed without losing meaning—think encyclopedia entries rather than narrative prose.

For each major topic page, begin with a clear, concise definition or answer statement within the first 100 words. If the page asks “what is X?”, answer that question in the opening paragraph with a complete, standalone definition that could be quoted independently. Follow with context, elaboration, and supporting details in subsequent paragraphs. This structure aligns with how AI models extract and cite information—they preferentially select clearly stated definitions and direct answers rather than inferring meaning from contextual clues spread across multiple paragraphs.

Implement explicit concept definitions for all important terminology. When introducing a term for the first time, provide a complete definition in the same sentence or immediately following. Format definitions consistently using bold or styling to help AI crawlers identify definitional content: “Generative Engine Optimization (GEO): The practice of optimizing digital content to increase visibility within AI-generated search results.” Avoid assuming readers know industry jargon or acronyms—explicit definitions improve both human comprehension and AI extraction accuracy, as patterns established in prompt engineering for AI-first SEO strategies demonstrate.

Practical change: A healthcare information site restructured their condition pages to begin with clear, extractable definitions followed by symptoms, causes, and treatment sections with FAQ components. Each concept (medical terms, procedures, medications) received explicit one-sentence definitions in bold. Within 45 days, Perplexity began citing their condition pages as primary sources for medical definitions, with citations increasing from 12% to 67% for queries in their content vertical. The key was making information extractable without context—each definition could stand alone even if removed from surrounding text.

Step 6: Build Explicit Entity Authority Signals

Establish clear, comprehensive entity profiles for your brand, products, key personnel, and important concepts within your domain. Create dedicated pages for significant entities rather than only mentioning them in passing. Each entity page should include: complete formal name, entity type classification, detailed description, relationships to other entities, external validation through citations, and Schema.org markup. For personal entities, include full credentials, work history, expertise areas, and links to third-party validation (LinkedIn, professional associations, speaking engagements).

Implement consistent entity reference patterns across all content. Once you’ve introduced an entity with full context, maintain consistent naming in subsequent references. If you introduce “Dr. Sarah Chen, Chief AI Architect at InMotion Hosting,” subsequent references should use consistent forms like “Dr. Chen” or “Chen” rather than varying between “Sarah,” “S. Chen,” or title variations. AI crawlers build entity graphs based on consistent reference patterns—variation creates confusion about whether multiple names refer to the same entity or different entities with similar names.

Create or update company information across all major databases and directories that AI models reference. Ensure your organization’s description, founding date, leadership team, and business classification align precisely across LinkedIn, Crunchbase, Bloomberg, Hoovers, and Wikipedia if applicable. Inconsistent information across these sources reduces AI confidence in all citations. Many AI models, particularly Claude, draw heavily from business databases for company information—maintaining accurate, comprehensive profiles in these systems directly influences how AI assistants describe your organization.

Practical change: A fintech startup invested in comprehensive entity definition across their digital presence. They created detailed profile pages for their C-suite executives with full credentials, published comprehensive company information on Crunchbase and LinkedIn with identical descriptions, and implemented Person schema for all executives. When asked to recommend emerging payment processing companies, Claude began specifically naming their CEO and CTO with accurate expertise descriptions pulled directly from structured data, while competitors received only company-level mentions without executive attribution. The entity authority investment differentiated them in AI recommendations through personal credibility signals.

Step 7: Optimize Technical Performance for Crawler Efficiency

Ensure your website’s technical infrastructure supports efficient crawler access without server strain or degraded human user experience. Target server response times under 200ms for high-priority pages. Implement effective caching strategies including browser caching, server-side caching, and CDN caching for static assets. Monitor crawler-specific bandwidth usage through server logs to identify if AI crawler activity is impacting site performance. If necessary, add Crawl-delay directives in robots.txt to space crawler requests without fully blocking access. Keep in mind that Crawl-delay is a non-standard directive that not every crawler honors (Googlebot ignores it), so confirm the effect in your logs and fall back to server-side rate limiting where it is ignored.
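Crawler bandwidth usage can be estimated directly from access logs. The sketch below is one possible approach, assuming the combined log format, where the response size in bytes follows the status code. It reports each AI crawler's share of total bytes served; the sample lines are invented for the example.

```python
import re
from collections import Counter

AI_CRAWLERS = ["GPTBot", "ClaudeBot", "OAI-SearchBot", "PerplexityBot",
               "Meta-ExternalAgent", "Bytespider"]

# Assumed tail of a combined-format line: ... status bytes "referer" "user-agent"
LOG_TAIL = re.compile(
    r'" (?P<status>\d{3}) (?P<bytes>\d+|-) "[^"]*" "(?P<agent>[^"]*)"$'
)

def bandwidth_share(lines):
    """Return {crawler: fraction of all bytes served}; empty if no bytes were seen."""
    per_bot = Counter()
    total = 0
    for line in lines:
        match = LOG_TAIL.search(line.strip())
        if not match or match.group("bytes") == "-":
            continue  # unparseable line or response with no body
        size = int(match.group("bytes"))
        total += size
        agent = match.group("agent").lower()
        for bot in AI_CRAWLERS:
            if bot.lower() in agent:
                per_bot[bot] += size
                break
    if total == 0:
        return {}
    return {bot: size / total for bot, size in per_bot.items()}

# Invented sample: one crawler request and one browser request.
sample = [
    '203.0.113.5 - - [01/Dec/2025:10:00:00 +0000] "GET /a HTTP/1.1" 200 4000 "-" "Mozilla/5.0 (compatible; GPTBot/1.2)"',
    '198.51.100.7 - - [01/Dec/2025:10:00:09 +0000] "GET /b HTTP/1.1" 200 6000 "-" "Mozilla/5.0 (Windows NT 10.0) Firefox/130.0"',
]
for bot, share in bandwidth_share(sample).items():
    print(f"{bot}: {share:.0%} of bytes served")
```

If a CDN sits in front of the origin, run this against origin logs as well as edge logs; the difference shows how much crawler load caching is absorbing.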

Review your hosting infrastructure capacity to handle increased bot traffic as AI crawlers proliferate. According to industry data, bot traffic now represents approximately 43% of total web traffic, with AI crawlers accounting for a rapidly growing share. Sites on shared hosting or minimal VPS plans may experience performance degradation as multiple AI crawlers access content simultaneously. Consider upgrading hosting resources or enabling Cloudflare’s Crawler Hints feature, which signals to participating crawlers when content has changed so they can skip redundant crawls. Monitor server logs during high AI crawler activity periods to identify resource bottlenecks.

Implement proper CDN configuration for static assets including images, CSS, JavaScript, and downloadable files. CDN usage reduces origin server load from crawler requests while improving response times that influence crawl budget allocation. Enable HTTP/2 or HTTP/3 protocols for more efficient resource delivery. Configure compression for text resources (HTML, CSS, JavaScript) to reduce bandwidth and improve transfer speeds. These technical optimizations signal site quality to AI crawlers while ensuring efficient resource usage that allows more comprehensive crawling within crawler budget constraints.

Practical change: An online education platform discovered AI crawlers were consuming 40% of their monthly bandwidth, causing occasional server overload. They implemented Cloudflare CDN with intelligent bot management, upgraded from shared hosting to a dedicated VPS, and configured crawl-delay directives to space major crawler requests by 5 seconds. Server load fell by 35% while full AI crawler access was maintained. Crawlers responded by increasing crawl depth rather than request frequency: they indexed more pages per session because improved performance allowed them to work within allocated crawl budgets more efficiently.

Step 8: Implement Continuous Monitoring and Adaptation

Establish systematic monitoring of AI crawler activity and citation performance to enable data-driven optimization. Because Google Analytics 4 automatically excludes known bot traffic and most crawlers never execute its JavaScript tag, treat server logs as the source of truth for crawler activity, and use GA4 custom reports to track referral visits arriving from AI platforms instead. Implement server log analysis with tools like GoAccess or Splunk to identify crawler access patterns, request volumes, and accessed pages. Monitor which crawlers visit most frequently and which pages they prioritize. Set up automated alerts for unusual crawler activity spikes that might indicate technical issues or changing crawler priorities.
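A spike alert does not need a dedicated product to get started. The sketch below flags any day whose crawler request count exceeds the trailing average by a set number of standard deviations. The 7-day window, the threshold of 3, and the sample counts are all assumptions to tune against your own traffic.

```python
from statistics import mean, pstdev

def find_spikes(daily_counts, window=7, threshold=3.0):
    """Return indexes of days whose count exceeds trailing mean + threshold * stdev."""
    spikes = []
    for i in range(window, len(daily_counts)):
        history = daily_counts[i - window:i]
        mu = mean(history)
        sigma = pstdev(history)
        # Floor sigma at 1 so a perfectly flat history does not flag tiny changes.
        limit = mu + threshold * max(sigma, 1.0)
        if daily_counts[i] > limit:
            spikes.append(i)
    return spikes

# Invented daily request counts for one crawler; day 8 is an obvious outlier.
counts = [100, 104, 98, 101, 99, 103, 100, 102, 480, 101]
print(find_spikes(counts))
```

Feeding this one series per crawler, taken from your log analysis, makes it clear which bot changed behavior rather than just that total bot traffic moved.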

Deploy AI citation monitoring tools to track brand mentions in AI-generated responses. Ahrefs’ Brand Radar tracks mentions in Google’s AI Overviews and other AI-powered features. Semrush’s AI Visibility Toolkit monitors brand presence across multiple AI platforms including ChatGPT, Perplexity, and Gemini. These tools work by running synthetic queries at scale and analyzing resulting AI responses for brand mentions, sentiment, and positioning. Regular monitoring reveals which content gets cited most frequently, which competitor brands appear alongside yours, and how your messaging is interpreted and presented by different AI systems.
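The core measurement behind these tools, the share of AI responses that mention a brand, is simple to reproduce on a small scale. The sketch below assumes you have already collected response texts for a set of test queries (how you collect them is outside its scope) and computes the mention rate. The brand name and responses are hypothetical.

```python
import re

def citation_rate(responses, brand):
    """Fraction of responses that mention brand as a whole phrase, ignoring case."""
    if not responses:
        return 0.0
    pattern = re.compile(r"\b" + re.escape(brand) + r"\b", re.IGNORECASE)
    cited = sum(1 for text in responses if pattern.search(text))
    return cited / len(responses)

# Hypothetical responses gathered for four test queries.
responses = [
    "For most users, Acme Audio offers the best value.",
    "Popular options include several well-known brands.",
    "Reviewers often recommend acme audio for beginners.",
    "It depends on your budget and listening habits.",
]
print(f"{citation_rate(responses, 'Acme Audio'):.0%} of responses mention the brand")
```

Running the same query set on a fixed schedule, and per platform, turns this into a trend line that can be compared before and after a content change.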

Establish quarterly review cycles to analyze crawler and citation data and adjust strategy accordingly. Identify content gaps where competitors receive AI citations but you don’t. Analyze which of your pages generate the most AI crawler interest and replicate successful patterns across similar content. Track changes in crawler behavior patterns—are specific crawlers increasing or decreasing activity on your site? Adjust robots.txt rules, content structure, and optimization priorities based on observed crawler preferences and citation performance. The AI crawler landscape evolves rapidly, requiring continuous adaptation rather than set-and-forget optimization.

Practical change: A consumer electronics retailer implemented monthly AI citation monitoring and discovered ChatGPT never cited their product comparison articles despite comprehensive content. Analysis revealed their comparisons lacked explicit “winner” declarations or clear recommendation statements. They restructured comparison articles to include definitive recommendation sections with explicit statements like “For most users, Product X offers the best value” rather than leaving conclusions ambiguous. Within 60 days, ChatGPT citation rate for their comparison content increased from 0% to 41%, demonstrating how monitoring reveals specific structural issues that affect citation probability.

Step 9: Create Citation-Worthy Content Assets

Develop content specifically designed to become preferred citation sources for AI models. This includes original research studies with quantitative findings, comprehensive guides that definitively explain complex topics, authoritative comparisons with clear methodology, and proprietary data or analysis unavailable elsewhere. AI models preferentially cite sources that provide unique information, clear expertise signals, transparent methodology, and definitive rather than equivocal conclusions. Content that hedges, qualifies excessively, or avoids clear statements gets cited less frequently than content that stakes authoritative positions supported by evidence.

Commission original surveys, industry studies, or data analysis that generate quotable statistics and findings. Even modest survey sample sizes (300-500 respondents) produce original data that AI models cite because alternatives are limited. Present findings with clear statistics, explicit methodology descriptions, and unambiguous interpretation. Format key findings as standalone statements that can be extracted without context: “67% of enterprise IT leaders plan to increase AI infrastructure spending in 2026, according to our November 2025 survey of 450 IT executives.” This specificity and completeness makes the finding citation-ready.

Create comprehensive, authoritative guides that definitively explain important concepts within your domain. These guides should include explicit concept definitions, clear relationship explanations, practical application examples, and expert author credentials. Length matters less than comprehensiveness—a 3,000-word guide that definitively covers a topic earns more citations than a 10,000-word exploration that leaves questions unanswered. AI models prefer sources that confidently explain rather than extensively explore, because they need extractable facts rather than nuanced discourse.

Practical change: A marketing automation company published a comprehensive 4,500-word guide to email deliverability optimization with explicit definitions for technical terms, quantitative analysis of deliverability factors, and clear ranking of effectiveness. They included author credentials highlighting the writer’s 15 years as a deliverability consultant and cited specific testing methodology. Within 90 days, this single guide became their most-cited asset across ChatGPT, Claude, and Perplexity, generating 340% more AI citations than their entire remaining content library combined. The key was authoritative comprehensiveness with extractable, definitive statements supported by credible expertise signals.

Step 10: Establish Cross-Platform Information Consistency

Audit all platforms where information about your brand, products, or key personnel appears and ensure absolute consistency. This includes your website, LinkedIn company and employee profiles, Crunchbase, Wikipedia if applicable, social media profiles, press releases, news coverage, and any directories or databases relevant to your industry. Inconsistent information confuses AI models about which source to trust, reducing citation confidence for all sources. Perfect consistency signals reliability and makes you a safer citation choice.

Create a canonical source document that defines exactly how your organization should be described: official company name, founding date, number of employees (or range), headquarters location, business description, key products/services, leadership team, and major milestones. Distribute this document to all team members responsible for updating external profiles. Review quarterly to ensure all external platforms reflect current, consistent information. When company information changes (new leadership, funding rounds, product launches), update all platforms simultaneously rather than gradually.

Pay particular attention to structured data that different platforms expose. LinkedIn’s organization schema, Crunchbase’s company data, and your website’s Schema.org markup should contain identical information for overlapping fields. AI models often cross-reference multiple sources before citing—finding consistent information across sources increases confidence that the information is accurate. Inconsistency between sources raises red flags that may lead AI models to avoid citation entirely rather than risk spreading inaccurate information. The operational burden of maintaining consistency is significant but the authority benefit is substantial.
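A consistency audit amounts to comparing each platform's record against the canonical source document field by field. The sketch below illustrates that comparison with plain dictionaries. The company details are hypothetical, and gathering the per-platform records (by hand or by export) is assumed to have happened already.

```python
def find_mismatches(canonical, profiles):
    """Return (platform, field, found, expected) for each field that differs."""
    mismatches = []
    for platform, record in profiles.items():
        for field, expected in canonical.items():
            # Only compare fields the platform actually exposes.
            if field in record and record[field] != expected:
                mismatches.append((platform, field, record[field], expected))
    return mismatches

# Hypothetical canonical record and platform profiles.
canonical = {"name": "Example Co", "founded": "2018", "employees": "85"}
profiles = {
    "website": {"name": "Example Co", "founded": "2018", "employees": "85"},
    "linkedin": {"name": "Example Co", "employees": "50-100"},
    "crunchbase": {"name": "Example Co, Inc.", "founded": "2018", "employees": "75"},
}
for platform, field, found, expected in find_mismatches(canonical, profiles):
    print(f"{platform}: {field} is {found!r}, expected {expected!r}")
```

Exact string comparison is deliberately strict here, since the goal stated above is identical wording across sources rather than values that are merely close.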

Practical change: A B2B SaaS company discovered their employee count was listed as “50-100” on LinkedIn, “75” on Crunchbase, “approximately 80” on their website, and “60+” in press releases. This inconsistency caused Claude to qualify every citation with “approximately” or “reports suggest” rather than confident statements. After establishing a single canonical source document and updating all platforms to show “85 employees” consistently, citation confidence language shifted to definitive statements. AI models stopped qualifying citations with uncertainty markers because cross-source validation now confirmed accuracy. This single change increased “confident citation rate” (unqualified statements) from 23% to 71% across monitored AI platforms.

Recommended Tools

Effective AI crawler optimization and GEO implementation requires specialized tools spanning monitoring, analysis, technical implementation, and content creation. The following tools represent current leaders as of December 2025, though the landscape evolves rapidly as new GEO-specific solutions emerge.

Knowatoa AI Search Console (Free tier available)

Comprehensive AI crawler access auditing that checks your site against 24+ different AI user agents and flags any access issues. Provides real-time alerts when new AI crawlers appear or existing crawlers change behavior patterns. Offers robots.txt testing specific to AI crawlers with detailed recommendations for strategic access control. The free tier covers basic crawler detection and access testing, while paid plans ($49/month) include historical crawler activity tracking and competitive benchmarking against similar sites.

Ahrefs Brand Radar ($99/month add-on)

Monitors brand mentions across Google’s AI Overviews, featured snippets, and other AI-powered search features. Tracks sentiment analysis of how your brand is framed in AI-generated content. Provides competitive comparison showing which competitor brands appear in similar contexts. Historical tracking reveals whether your AI presence is increasing or declining over time. Integrates with existing Ahrefs accounts but requires separate subscription for brand monitoring features.

Semrush AI Visibility Toolkit ($129/month)

Dedicated platform for tracking brand presence across ChatGPT, Perplexity, Gemini, and other major generative AI systems. Runs synthetic queries at scale and analyzes resulting mentions, positioning, and context. Provides actionable recommendations for improving citation frequency based on competitive analysis. Includes content gap analysis showing topics where competitors get cited but your brand doesn’t. The toolkit represents Semrush’s entry into the GEO monitoring space and updates monthly with new features as the category matures.

Perplexity Pro ($20/month)

Essential for testing how your content appears in AI-generated responses. The Pro version provides unlimited searches with access to premium models including GPT-4, Claude, and Gemini. Enables systematic testing of different query formulations to understand citation patterns. Shows source attribution clearly, allowing you to verify which content gets cited and how it’s presented. Useful for competitive research by examining which sources Perplexity cites for queries in your domain.

ChatGPT Plus ($20/month)

Provides access to GPT-4 and ChatGPT’s search functionality for testing citation behavior. Essential for understanding how OpenAI’s systems select and present sources. Enables systematic query testing to verify whether your content appears in responses. Search feature shows explicit source URLs, making it easy to track whether optimization efforts improve citation rates. Operator access (when available) helps understand agentic browsing behavior.

Claude Pro ($20/month)

Access to Anthropic’s Claude with search capabilities for testing citation behavior. Particularly useful for B2B brands as Claude demonstrates strong preference for authoritative business databases and professional content. Enables analysis of how Claude frames and presents your brand versus competitors. The Artifacts feature helps understand how Claude structures information extraction from sources.

Schema.org Validator (Free)

Official structured data testing tool that validates JSON-LD markup accuracy. Identifies errors and warnings that might prevent AI crawlers from correctly interpreting entity definitions. Provides detailed error messages with specific line numbers and property issues. Essential for ensuring structured data implementation follows specification requirements that AI crawlers expect.

Google Search Console (Free)

Provides structured data reports showing markup validation and rich result eligibility. Monitors crawler access issues and server errors that might affect AI crawler access. Offers URL inspection tool for testing individual page crawlability. While focused on Google’s crawler, provides foundation for understanding general crawler accessibility issues that affect AI crawlers similarly.

Screaming Frog SEO Spider ($259/year)

Desktop tool for comprehensive site auditing including semantic HTML structure analysis. Crawls websites to identify heading hierarchy issues, missing Schema.org markup, and structural problems that affect AI crawler indexing. Generates detailed reports showing technical issues at scale across large sites. Custom extraction features enable analysis of how well content matches AI-friendly patterns.

Cloudflare Bot Management ($20/month add-on)

Provides intelligent bot traffic management including AI crawler access control. Offers detailed analytics showing which crawlers access your site and resource consumption patterns. Enables allowlisting verified AI crawler IP ranges while blocking impersonators. Rate limiting features help manage crawler load without completely blocking access. Essential for sites experiencing performance issues from aggressive crawler activity.

Surfer SEO with AI ($119/month)

Content optimization platform with AI-specific features including semantic density analysis and entity definition recommendations. Provides real-time scoring as you write, indicating whether content structure matches AI crawler preferences. Offers competitive content analysis showing how top-cited sources structure similar content. NLP analysis identifies semantic relationships and concept coverage that influence AI citation probability.

Clearscope ($170/month)

Content optimization platform focused on semantic relevance and topic comprehensiveness. Analyzes top-ranking content and AI-cited sources to identify concept coverage gaps. Provides term frequency recommendations balanced for semantic value rather than keyword density. Useful for ensuring content addresses all relevant sub-topics that AI models expect authoritative sources to cover.

PageSpeed Insights (Free)

Google’s page performance analysis tool measuring loading speed and core web vitals that influence crawler efficiency. Provides specific recommendations for improving response times that affect crawl budget allocation. Mobile performance analysis ensures crawlers encounter fast, accessible content regardless of user-agent. Technical optimization recommendations directly impact crawler efficiency and crawl depth.

Sitebulb ($35/month)

Desktop website crawler with advanced visualization of site architecture and technical issues. Generates comprehensive technical SEO audits identifying issues that affect AI crawler access and indexing. Provides visual representations of internal linking patterns that help understand how crawlers discover and prioritize content. Hints feature offers specific recommendations tied to issues found, making it easier to prioritize fixes.

Advantages and Limitations

AI crawler optimization and generative engine optimization present significant advantages for brands seeking visibility in the emerging AI-powered search landscape. The primary advantage is establishing presence in the foundational knowledge layer that shapes how AI systems understand and recommend brands for potentially years to come. Training data indexed by AI crawlers in 2024-2025 forms the baseline knowledge that influences model behavior through multiple generations of fine-tuning and updates. Brands that optimize early gain compounding advantages as their authority signals become embedded in model weights, making them preferred sources for real-time citations even as competition increases. This creates a first-mover advantage more durable than traditional SEO, where rankings fluctuate based on algorithm updates and competitive activity.

The shift toward reference-based metrics rather than traffic-based metrics fundamentally changes success measurement and attribution. Instead of optimizing for clicks and direct traffic, GEO prioritizes being cited, mentioned, and correctly represented in AI-generated responses. This creates opportunities for B2B brands and professional services firms whose value comes from expertise rather than transactional traffic. A consulting firm might receive minimal direct traffic from AI citations but experience substantial brand elevation when Claude or ChatGPT specifically names their partners as domain experts. The value isn’t in the click but in the attribution—being encoded into AI systems as an authoritative source represents a new category of brand equity that traditional metrics fail to capture.

AI crawler optimization reduces dependency on traditional search engine algorithms and their frequent, unpredictable updates. SEO practitioners have spent decades adapting to Google’s ever-changing ranking factors, with major updates sometimes decimating traffic overnight. GEO operates on more stable principles because it aligns with fundamental requirements of how language models process information: semantic clarity, entity definition, authority signals, and structured data. While specific AI models evolve, these foundational requirements remain consistent across systems. Content optimized for AI crawlers works across ChatGPT, Claude, Perplexity, and Gemini simultaneously because all rely on similar semantic processing mechanisms. This creates optimization leverage—improvements benefit visibility across multiple platforms rather than optimizing separately for each.

The emerging category of zero-click search, while challenging for traffic-dependent models, creates significant value for brands that monetize through perception and authority rather than direct response. Professional services, financial services, healthcare, and B2B technology sectors particularly benefit from AI citation even without website visits. When ChatGPT recommends specific law firms for intellectual property work or Claude names particular cybersecurity vendors for enterprise solutions, the brand value comes from the recommendation itself—the AI system has essentially endorsed that brand to its user. This represents a form of earned media more valuable than many paid advertising positions because it comes with the implicit credibility of the AI system’s judgment.

Technical implementation of GEO optimization aligns closely with existing SEO best practices, reducing the learning curve and leveraging existing expertise. Semantic HTML, structured data, clear information architecture, and fast page loads benefit both traditional search engines and AI crawlers. Teams already invested in quality SEO implementation can extend their work to AI optimization without fundamentally new skillsets or infrastructure. Schema.org markup, originally developed to help traditional search engines understand content, now serves an even more critical role helping AI crawlers extract accurate information. This continuity means existing SEO investments continue providing value while extending into the AI search domain.

Despite these advantages, AI crawler optimization and GEO present substantial limitations and challenges that must be acknowledged. The most fundamental limitation is the black box nature of AI model training and citation selection. Unlike traditional search engines that provide relatively transparent ranking signals (backlinks, keyword relevance, page speed), AI systems operate as opaque decision-makers with undisclosed selection criteria. We can observe patterns and test hypotheses, but we cannot know with certainty why one source gets cited over another in specific contexts. This opacity makes optimization partially speculative—we implement practices that correlate with citations without understanding the precise causal mechanisms driving AI selection decisions.

The zero-click search phenomenon, while advantageous for brand perception, fundamentally threatens traffic-dependent business models including advertising-supported content, affiliate marketing, and lead generation systems. When AI provides comprehensive answers without requiring users to visit source websites, the economic model underlying much of the web collapses. Publishers who invested in authoritative content find their information extracted and repurposed by AI systems, eliminating the traffic that justified content creation in the first place. This creates a strategic dilemma: blocking AI crawlers preserves short-term traffic but sacrifices long-term authority, while allowing crawlers enables AI visibility at the expense of direct website engagement. No clear optimal strategy exists for content businesses whose value depends on user attention rather than brand perception.

Attribution and citation behavior varies dramatically across AI platforms, creating fragmentation challenges for brands trying to optimize systematically. ChatGPT rarely provides explicit source URLs unless it is running in search mode, making it difficult to verify which sources informed a response. Perplexity consistently shows sources with numbered citations, providing transparency but raising questions about which sources matter most when multiple are cited. Claude draws heavily from business databases in addition to web content, requiring optimization across different source types. Gemini integrates with Google’s search index but uses distinct citation patterns from traditional search results. This platform fragmentation means optimization that works well for one AI system may be less effective for others, requiring platform-specific strategies that multiply complexity and resource requirements.

The measurement and attribution challenges in GEO substantially exceed those of traditional SEO. Google Search Console provides clear data on impressions, clicks, and rankings for traditional search, but no equivalent comprehensive measurement platform exists for AI citations. Third-party tools like Ahrefs Brand Radar and Semrush’s AI Visibility Toolkit provide partial visibility through synthetic queries, but they can’t capture the full breadth of real user queries and resulting citations. This measurement gap makes it difficult to prove ROI for GEO investments or attribute specific business outcomes to AI optimization efforts. Executives accustomed to clear SEO metrics (organic traffic, keyword rankings, conversion rates) find AI citation metrics vague and circumstantial, making budget allocation challenging.

The pace of AI platform evolution creates strategic uncertainty about optimization priorities. New AI search platforms launch regularly, each with distinct citation patterns and source preferences. Existing platforms undergo frequent updates that change behavior unpredictably. ChatGPT’s introduction of search mode fundamentally changed its citation behavior. Perplexity’s evolution from citation-focused to recommendation-focused altered what optimization approaches work best. Gemini’s integration with Google search created hybrid behaviors different from standalone AI models. This rapid evolution means optimization strategies that work today may become obsolete as platforms mature. The resource investment required to continuously adapt creates ongoing costs that must be weighed against uncertain long-term benefits.

Content creation for AI optimization can conflict with human user experience goals. Content structured for easy AI extraction often reads mechanically—explicit definitions, repeated context, and standalone statements optimize for AI but may bore human readers expecting narrative flow. FAQ sections optimized for AI query matching can feel forced and artificial in content that would flow better without them. Schema.org markup adds code complexity and maintenance burden without visible user-facing benefits. The tension between AI-optimized and human-optimized content creates design challenges where serving one audience potentially degrades experience for the other. Sophisticated content strategies must balance these competing objectives without sacrificing either completely.

The potential for AI misattribution and misinformation represents a significant reputation risk for brands allowing crawler access. AI models sometimes confidently cite sources inaccurately, attribute statements to wrong entities, or combine information from multiple sources in ways that create false impressions. When ChatGPT misattributes a competitor’s pricing to your brand or combines your product description with a competitor’s limitations, you have limited recourse to correct the error. Unlike traditional search where you can contact Google about SERP inaccuracies, AI models provide no clear correction mechanism. Training data errors persist through model generations, making initial misattribution potentially long-lasting. This risk is particularly acute for brands in regulated industries where misinformation could have compliance implications.

Conclusion

The transformation from traditional search indexing to AI-powered knowledge acquisition represents the most fundamental shift in web visibility since search engines emerged in the 1990s. AI crawlers from OpenAI, Anthropic, Meta, and Perplexity are systematically building comprehensive training datasets that will shape how generative AI systems understand, cite, and recommend brands for potentially years to come. The data is unambiguous: GPTBot increased crawling activity by 305% year-over-year, 60% of internet users now rely on AI assistants for search, and 80% of AI crawling serves training purposes that establish foundational model knowledge. Website owners face a strategic inflection point where current optimization decisions directly influence long-term AI visibility. Related patterns in prompt engineering for AI SEO show how closely these emerging disciplines are connected.

The practical framework for AI crawler optimization rests on semantic clarity, entity definition, authority signals, and technical accessibility, applied methodically across content, technical infrastructure, and cross-platform consistency. Success requires strategically allowing crawler access, implementing comprehensive structured data, creating extractable content architecture, building explicit entity authority, optimizing technical performance, and continuously monitoring citation performance through emerging tools like Ahrefs Brand Radar and Semrush’s AI Visibility Toolkit. These aren’t theoretical recommendations but operational requirements demonstrated by organizations like InMotion Hosting, whose systematic optimization increased AI citations by 340% and shifted ChatGPT referral traffic from 2.1% to 12.8% of total referrals.

The competitive advantage in this emerging landscape belongs to brands that recognize the shift from page rank to model perception—from optimizing for where you appear in search results to how you’re encoded in the AI systems generating those results. Traditional SEO remains relevant, but it now serves as foundation for the more fundamental challenge of ensuring AI models understand your brand accurately, cite your expertise appropriately, and recommend your solutions correctly. The first movers in comprehensive AI crawler optimization are establishing authority patterns that compound over time as their citations reinforce their position as preferred sources. Organizations that delay optimization risk permanent disadvantage as training datasets solidify and the window for establishing foundational authority narrows with each model generation.

For more, see: https://aiseofirst.com/prompt-engineering-ai-seo

FAQ

Q: What are AI crawlers and how do they differ from traditional search engine bots?

A: AI crawlers are specialized bots that systematically scan websites to collect data for training large language models or powering real-time AI search results. Unlike traditional crawlers like Googlebot that index content to drive traffic to your site, AI crawlers often gather data to generate direct answers, sometimes bypassing your website entirely. Major AI crawlers include GPTBot (OpenAI), ClaudeBot (Anthropic), PerplexityBot, and Meta-ExternalAgent. They identify themselves through user-agent strings that allow site owners to control access through robots.txt configurations.
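
Because these crawlers announce themselves in the user-agent string, one simple way to see which of them visit a site is to match that string against the crawler names listed above. The following is a minimal sketch, not a definitive detector: the substring matching rule, the function names, and the sample strings in the usage block are illustrative assumptions, and real user-agent strings carry more detail than shown here.

```python
from collections import Counter
from typing import Iterable, Optional

# Crawler names taken from the answer above. Matching them as
# case-insensitive substrings is an assumption made for this sketch.
AI_CRAWLER_TOKENS = ("GPTBot", "ClaudeBot", "PerplexityBot", "Meta-ExternalAgent")


def classify_user_agent(user_agent: str) -> Optional[str]:
    """Return the first crawler token found in the user-agent, else None."""
    lowered = user_agent.lower()
    for token in AI_CRAWLER_TOKENS:
        if token.lower() in lowered:
            return token
    return None


def count_ai_crawler_hits(user_agents: Iterable[str]) -> Counter:
    """Tally requests per AI crawler across a sequence of user-agent strings."""
    hits: Counter = Counter()
    for user_agent in user_agents:
        token = classify_user_agent(user_agent)
        if token is not None:
            hits[token] += 1
    return hits


if __name__ == "__main__":
    # Made-up sample strings, only for demonstration.
    sample = [
        "Mozilla/5.0 (compatible; GPTBot/1.0)",
        "Mozilla/5.0 (compatible; ClaudeBot/1.0)",
        "Mozilla/5.0 (X11; Linux x86_64) Firefox/120.0",
    ]
    print(count_ai_crawler_hits(sample))
```

User-agent strings can be spoofed, so counts produced this way are best treated as an approximation of crawler activity.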

Q: How fast is AI crawler traffic growing compared to traditional search crawlers?

A: AI crawler traffic has experienced explosive growth. According to Cloudflare data from May 2024 to May 2025, GPTBot increased its crawling activity by 305%, while overall AI and search crawler traffic rose 18%. GPTBot’s share grew from 2.2% to 7.7% of total crawler traffic, jumping from #9 to #3 position. The traditional search crawler Googlebot still leads with a 39% share, but AI-specific crawlers now represent the fastest-growing segment. Meta-ExternalAgent emerged from near-zero to capture 19% of AI crawler activity within the same period.

Q: Should I block or allow AI crawlers on my website?

A: The decision depends on your business model. If you sell products or services and benefit from brand visibility in AI recommendations, allowing AI crawlers enhances your presence in generative search—especially since 60% of internet users now rely on AI assistants for search. If you monetize primarily through advertising and traffic, blocking may seem attractive since AI often generates zero-click answers. However, blocking training crawlers sacrifices long-term authority for short-term traffic protection. Most businesses benefit from allowing all major AI crawlers while optimizing content structure and quality to maximize citation value. You can control access through robots.txt rules using specific user-agent strings like GPTBot, ClaudeBot, or PerplexityBot.
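
To make the robots.txt option concrete, here is a small sketch that checks what a given policy permits for each crawler, using Python's standard urllib.robotparser module. The policy text itself is hypothetical, as are the example.com URLs and the /drafts/ path; it is shown only to illustrate per-crawler rules, not as a recommended configuration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical policy for illustration only: GPTBot may crawl everything
# except /drafts/, PerplexityBot is blocked entirely, and every other
# crawler falls through to the wildcard group, which allows everything.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /drafts/

User-agent: PerplexityBot
Disallow: /

User-agent: *
Allow: /
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text held in memory instead of fetching it."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    parser = build_parser(ROBOTS_TXT)
    for agent in ("GPTBot", "ClaudeBot", "PerplexityBot"):
        for path in ("/blog/post", "/drafts/post"):
            url = "https://example.com" + path
            print(agent, path, parser.can_fetch(agent, url))
```

Running a check like this before deploying a robots.txt change helps confirm that the rules do what was intended. Note that robots.txt is advisory: it tells compliant crawlers what to skip but does not technically prevent access.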

Q: What practical steps should I take to optimize for AI crawler indexing?

A: Start by ensuring AI crawlers can access your content through proper robots.txt configuration. Implement semantic HTML with clear structure using tags like header, article, section, and aside. Add comprehensive Schema.org structured data including Article, Organization, Person, FAQPage, and entity markup in JSON-LD format. Structure content with direct answers in opening paragraphs, explicit concept definitions, and clear section headers. Create FAQ sections that mirror natural language queries. Monitor your server logs to track which AI crawlers visit your site and which pages they prioritize. Deploy tools like Knowatoa’s AI Search Console to verify crawler access and Ahrefs Brand Radar to track citation performance.
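
As an illustration of the structured data step, the sketch below builds Schema.org FAQPage markup in JSON-LD from question and answer pairs. The FAQPage, Question, and Answer types and their mainEntity, name, acceptedAnswer, and text properties come from Schema.org; the function names and the sample pair are assumptions made for this example.

```python
import json
from typing import Dict, Iterable, Tuple


def build_faq_jsonld(pairs: Iterable[Tuple[str, str]]) -> Dict:
    """Build a Schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


def to_script_tag(data: Dict) -> str:
    """Serialize the object into a JSON-LD script tag for the page HTML."""
    payload = json.dumps(data, indent=2, ensure_ascii=False)
    # Escape "</" so answer text cannot close the script element early;
    # "<\/" is still valid JSON and decodes back to "</".
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


if __name__ == "__main__":
    faq = build_faq_jsonld([
        (
            "Should I block or allow AI crawlers on my website?",
            "The decision depends on your business model.",
        ),
    ])
    print(to_script_tag(faq))
```

Generating the markup from the same source as the visible FAQ text keeps the two from drifting apart, which matters because structured data is expected to reflect what is actually on the page.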

Q: How will AI crawlers change SEO strategy in 2026 and beyond?

A: The shift is fundamental: traditional metrics like click-through rate and SERP position are being replaced by reference rates—how often your content is cited in AI-generated answers—and generative appearance scores that measure prominence within AI responses. Success requires optimizing for being encoded correctly into AI training data through authoritative content, clear entity definitions, strong expertise signals, and comprehensive structured data. The competitive advantage shifts from achieving high page rankings to establishing model perception where AI systems recognize your brand as an authoritative source. Brands must prioritize being present in training datasets now, as these shape model behavior for years and training opportunities decrease as models mature. The optimization focus extends from individual websites to cross-platform consistency across all digital properties that AI models reference.
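
Since reference rate is described here as how often your content is cited in AI-generated answers, one plausible way to estimate it is the share of sampled answers that cite at least one URL on your domain. The sketch below assumes you have already collected, for each sampled answer, the list of URLs it cited; the function names, the domain matching rule, and the sample data are all hypothetical and do not reflect how any particular tool computes its scores.

```python
from typing import List, Sequence
from urllib.parse import urlparse


def cites_domain(url: str, domain: str) -> bool:
    """True if the URL's host is the domain itself or one of its subdomains."""
    host = urlparse(url).hostname or ""
    return host == domain or host.endswith("." + domain)


def reference_rate(answers: Sequence[List[str]], domain: str) -> float:
    """Fraction of sampled answers citing at least one URL on the domain.

    `answers` holds one list of cited URLs per sampled AI answer.
    Returns 0.0 when no answers were sampled.
    """
    if not answers:
        return 0.0
    cited = sum(
        1 for urls in answers if any(cites_domain(url, domain) for url in urls)
    )
    return cited / len(answers)


if __name__ == "__main__":
    # Made-up sample: four answers, each with the URLs it cited.
    sampled = [
        ["https://example.com/guide"],
        ["https://other.org/post"],
        [],
        ["https://www.example.com/faq", "https://other.org/review"],
    ]
    print(reference_rate(sampled, "example.com"))
```

A figure like this only describes the queries that were sampled, so it is better read as a trend indicator tracked over time than as an absolute measure of visibility.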